EOPEN User Guide¶

Abstract

This document is the EOPEN User Guide. Its purpose is to describe the features and the capabilities supported by the EOPEN User Portal. The content of this document is part of the result of the work performed on T6.2 “EOPEN System Integration” and T6.4 “Interactive real‑time user‑friendly visualisation”.

Version |

Date |

Reason |

Revised by |

Approved by |

|---|---|---|---|---|

3/5-Aug-2020 |

Internal review |

Gilles Lavaux, Valentina Faccin, Gabriella Scarpino (Serco) |

||

1.0 |

19-Aug-2020 |

First release |

Authors |

Leslie Gale |

Organisation |

Name |

Contact |

|---|---|---|

SpaceApps |

Bernard Valentin |

|

SpaceApps |

Hakim Boulahya |

|

SpaceApps |

Leslie Gale |

|

CERTH |

Stelios Andreadis |

This deliverable presents the final result of the work performed on the “interactive real‑time user‑friendly visualisations” task. This result corresponds to the software integrated in the third and final release of the EOPEN Platform.

The objective of the EOPEN User Portal is to provide to end‑users means to search for and visualise the data available in the EOPEN Platform in the most appropriate manner. The available data may be “proxied” (thus fetched in real‑time from external sources such as FMI), may have been manually or automatically ingested as it is the case of weather forecast and tweets data, or may have been generated within the platform as it is the case of water body masks and rice paddy fields maps.

The EOPEN User Portal is meant to be as generic as possible. It integrates a series of System Dashboards that are pre‑configured to display different types of data of interest whatever the use case. It also provides a series of graphical components that may be used to create custom dashboards.

This document describes how users may use the EOPEN User Portal to find and visualise data but also how to create new dashboards to better meet their needs.

How to access the EOPEN Platform (User Portal)

The EOPEN User Portal described in this document is available at the following address:

https://eopen.spaceapplications.com

AOI |

Area of Interest |

API |

Application Programming Interface |

ASB |

Automated Service Builder |

EO |

Earth Observation |

EOPEN |

opEn interOperable Platform for unified access and analysis of Earth observatioN data |

ESA |

European Space Agency |

FSM |

Full Strip-Map |

FTP |

File Transfer Protocol |

GDAL |

Geospatial Data Abstraction Library |

GPT |

Graph Processing Tool |

GUI |

Graphical User Interface |

HTTP |

Hyper-Text Transfer Protocol |

JSON |

JavaScript Object Notation |

OGC |

Open Geospatial Consortium |

S1, S2, S3 |

Sentinel‑1, Sentinel‑2, Sentinel‑3 |

SNAP |

Sentinel Application Platform |

SpaceApps |

Space Applications Services |

VM |

Virtual Machine |

WFS |

Web Feature Service |

WMS |

Web Map Service |

WPS |

Web Processing Service |

Introduction¶

This document describes the features and capabilities implemented in the EOPEN User Portal integrated in the final release of the EOPEN Platform.

General Concepts¶

The EOPEN User Portal makes use of a few general concepts to refer to its building parts.

First of all, there is the Portal itself. This refers to the whole Web application. All the pages, menus, graphical components visible in the Web browser are considered as building blocks of the Web portal.

Besides a couple of special pages (including the “Home” page and the “About” page), the pages that are used to display data available in the EOPEN Platform are called Dashboards. Dashboards contain one or more graphical (web) Components which are used to search and visualise the data. Different filters and viewers are available to support the different types of data present in the platform.

The next chapter describes in details the available dashboards and components and explains how new custom dashboards can be created by selecting and arranging components as needed.

User Accounts / Authentication¶

The EOPEN Web Portal is accessible without restrictions. The displayed information and the dashboards created by the users are publicly accessible.

This is a temporary situation. The implementation roadmap foresees a merge of the EOPEN Web Portal with the Developer Portal. At that time the role-based access control already used to restrict access to the developers will also be applied to the end‑users who only need to access the Web Portal described in this document.

Configuration¶

As users are not yet individually recognised, the changes applied to the portal pages are shared and customisable by anyone.

The EOPEN User Portal¶

This chapter describes the user interface of the EOPEN User Portal. Next section gives an overview of the available pages and the main navigation links.

The remaining of the chapter describes the pre‑defined pages, the available components and the controls for creating and customising new pages.

Site Map and Page Layout¶

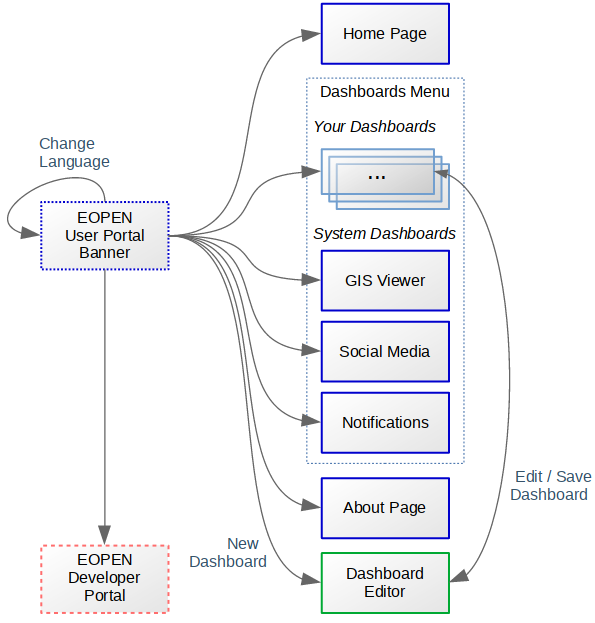

Figure 1, below, shows the structure of the EOPEN User Portal. Except the banner panel shown on the left, each box represents a Web page and the arrows show the navigation between the pages.

Figure 1 EOPEN User Portal Site Map¶

The EOPEN User Portal Home Page (https://eopen.spaceapplications.com) welcomes the visitor. The page body informs about the EOPEN project and provides a link to the Developer Portal, described in a separate document.

The page banner, included in all pages, contains the following elements:

The EOPEN logo that leads to the Home Page.

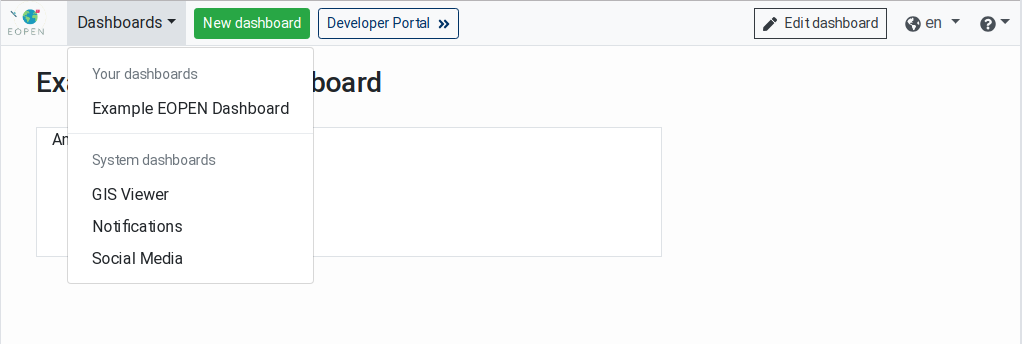

A Dashboards menu which lists the available dashboards grouped in two categories:

“Your dashboards” contains the dashboards created and configured by the users. In a fresh installation this category is empty.

Important: As the dashboards are currently shared among the users, pay attention not to modify a dashboard without the consent of its creator, if known.

“System dashboards” lists the built‑in dashboards. These are pre‑defined in the EOPEN Portal and are not editable.

The GIS Viewer dashboard allows searching and visualising geospatial

data on backgound maps. The GIS Viewer dashboard is described in

GIS Viewer.

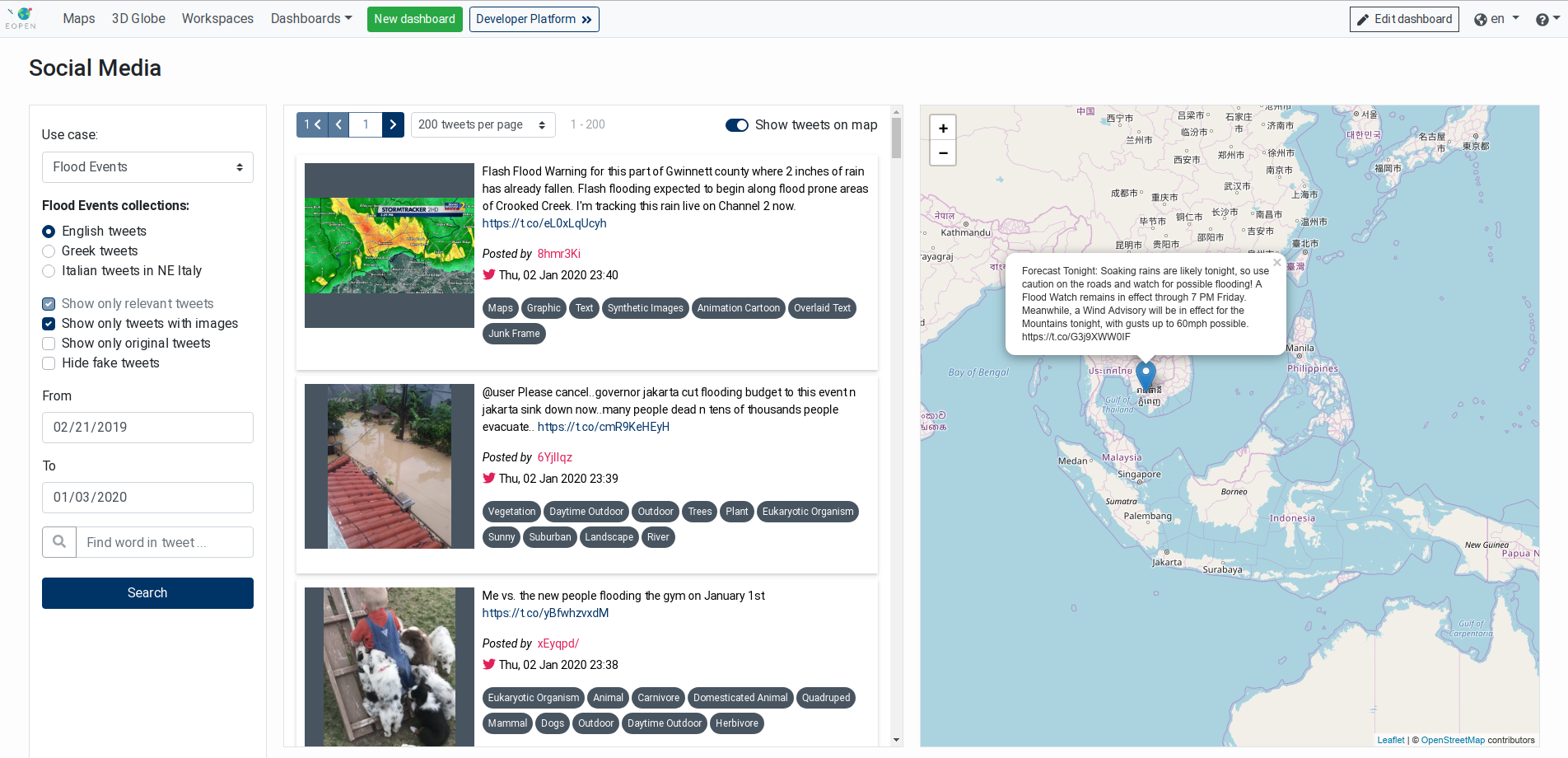

The Social Media dashboard allows searching, inspecting and

visualising collected Tweets on an interactive map. This dashboard is

described in details in Social Media (Tweets) Viewer.

The Notifications dashboard displays the messages issued by the

applications running in the EOPEN Platform through Mattermost channels.

The Notifications and Instant Messaging dashboard is described in

Notifications and Instant Messaging.

A New dashboard button that allows creating custom dashboards, as described in

User Authentication.A Developer Portal » button that leads to the EOPEN Developer Portal described in the dedicated EOPEN Developer Guide.

An Edit dashboard button is displayed on the right side and only when an editable Dashboard is selected. Click on this button to enter the dashboard editing mode, as described in

User AuthenticationA menu that allows selecting the display language. Supported languages are English (en), Italian (it), Finnish (fi) and Korean (ko, as shown on Figure 3).

A question mark icon leads to the About page. This page provides technical information about the portal including the list of the integrated third party data and software.

Figure 2 Home Page¶

The screenshot in Figure 3 shows how the Home page is displayed when the Korean language is selected.

Figure 3 Home Page (Korean Version)¶

System Dashboards¶

Pre‑defined pages already combine available components to provide general purpose features. These are introduced in the following sections.

GIS Viewer¶

The GIS Viewer page allows visualising the geo‑localised data available in the platform on interactive 2D maps and 3D globe. The page is accessed by clicking on the GIS Viewer entry in the Dashboards menu.

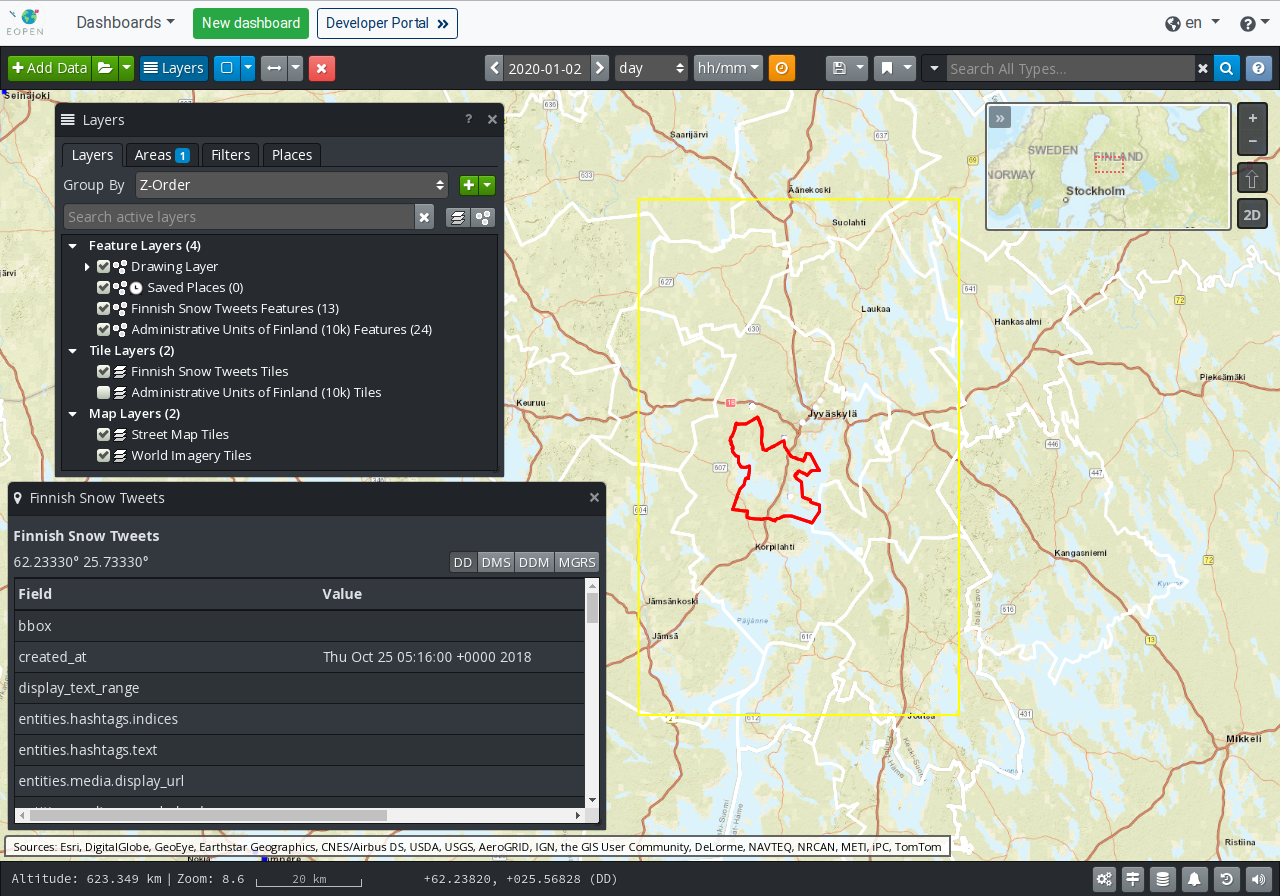

As may be seen on Figure 4, besides the banner at the top, the GIS Viewer occupies the entire page. The Viewer is implemented with the open‑source software OpenSphere whose technical information may be found at: https://opensphere.readthedocs.io/en/latest/. The tool includes embedded help information, accessible through the question mark button located in its upper‑right corner.

In this section we explain how data available in the EOPEN Platform may be selected and visualised on the map. The geo‑localised data is stored in a GeoServer instance pre‑configured in the viewer.

Figure 4 GIS Viewer¶

Proceed as follows to visualise data as layers on the map:

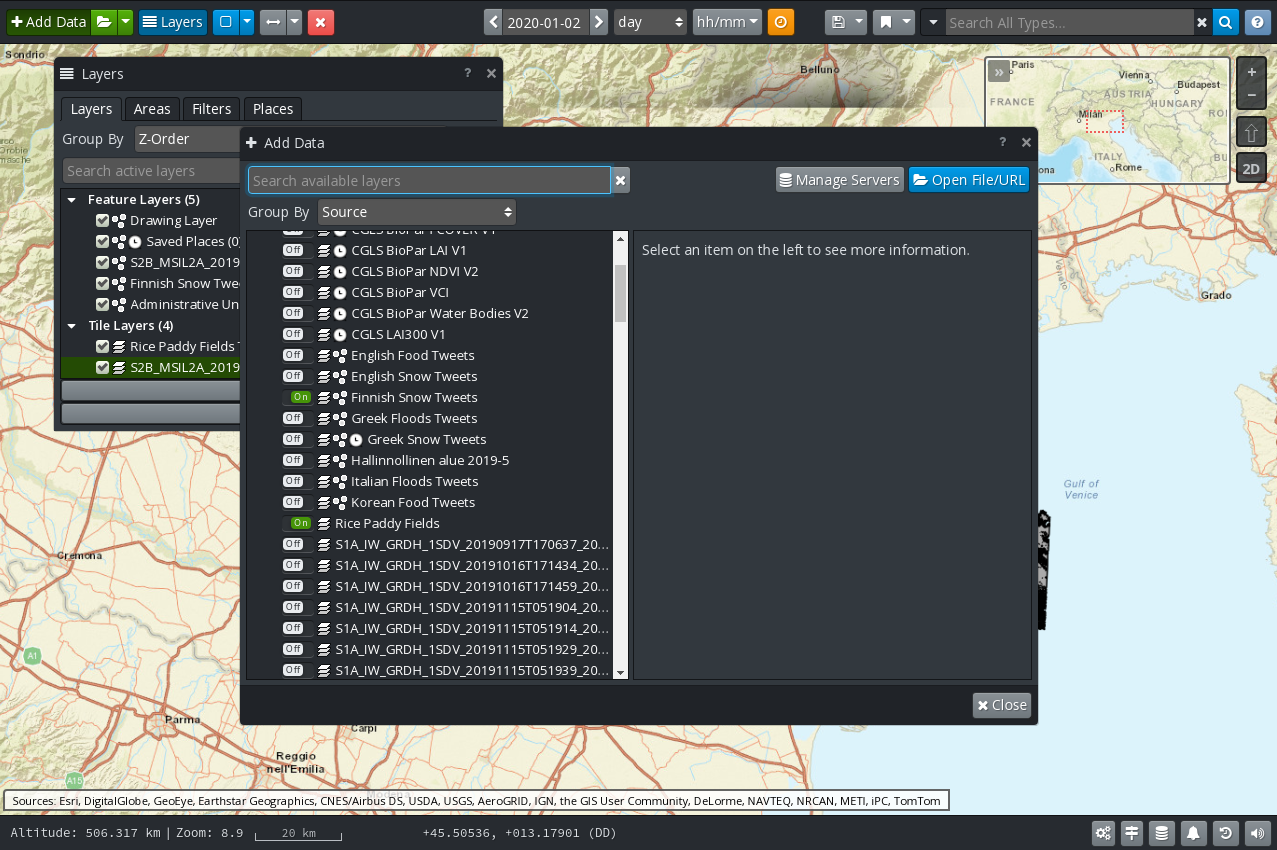

Click on the Add Data button located in the upper‑left corner of the viewer.

In the Add Data dialog box (Figure 6), identify and expand the “EOPEN GeoServer” source.

Toggle the switch next to the layers of interest. The changes are immediately applied. There no button to accept the changes. Click on the Close button when done.

If the Layers floating panel is not visible, click on the Layers button in the banner.

The Layers panel lists the selected Feature Layers, Tile (Raster) Layers and the (Base) Map Layers.

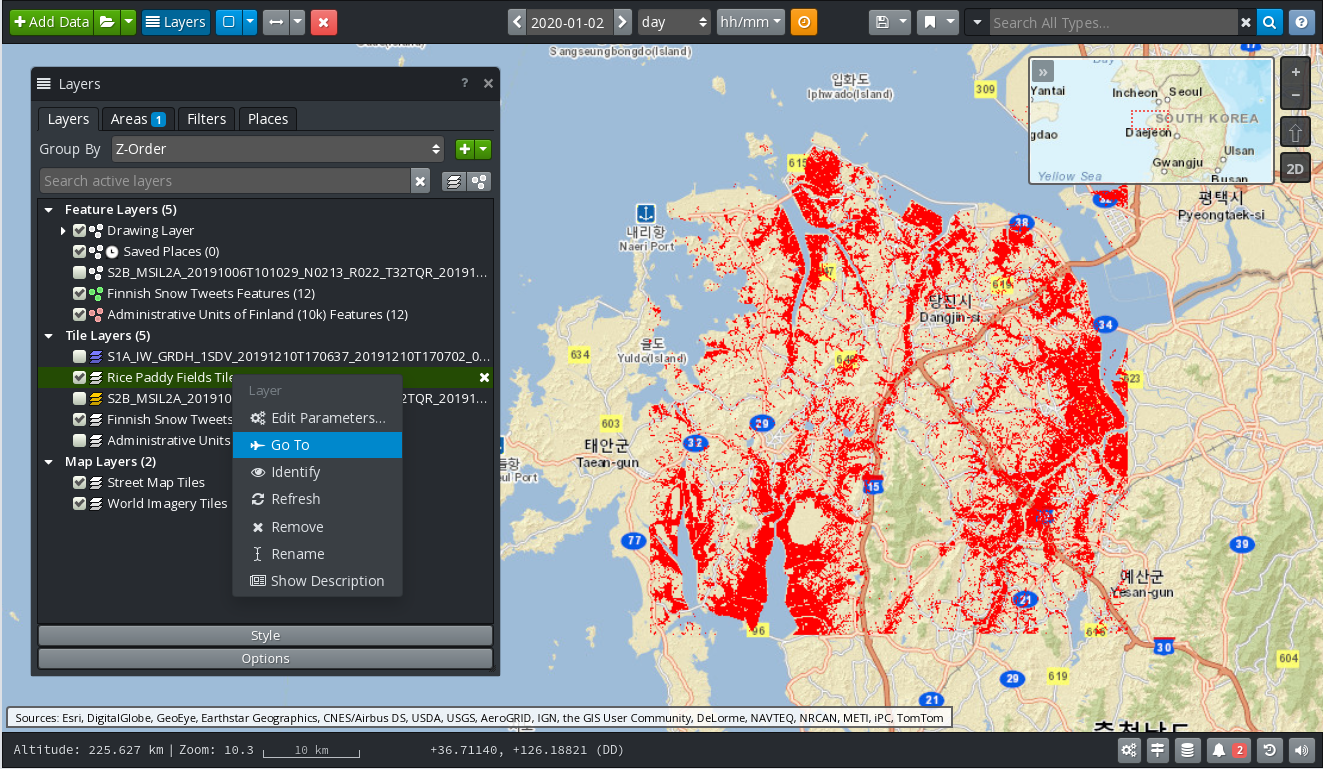

In order to focus the map on the data of a certain layer, select the layer in the list, right‑click on it to reveal a popup menu, then select the Go To entry in the menu. Figure 5 shows the map focusing on a water mask product.

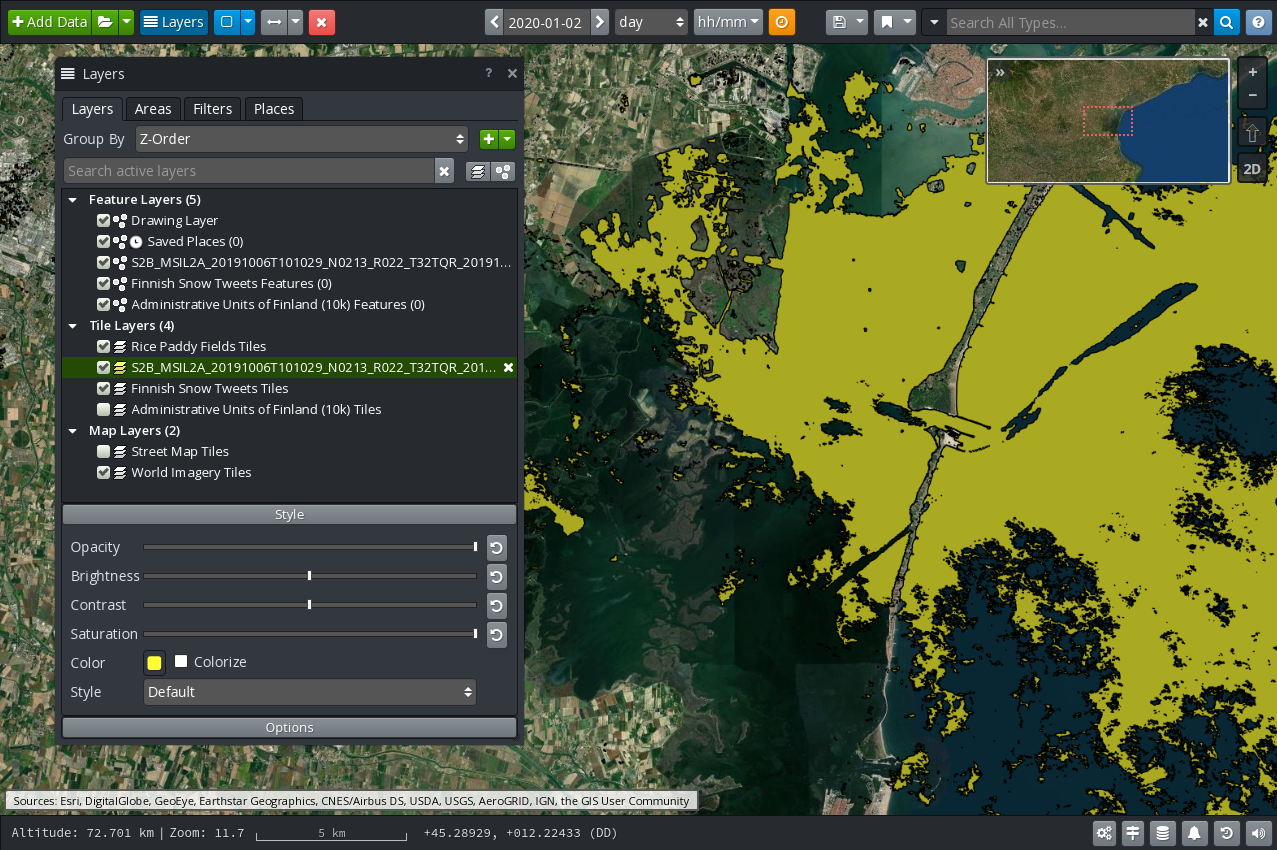

Figure 5 GIS Viewer: Go To Rice Paddy Mask Layer¶

Available Data

Geo‑localised data available in the EOPEN Platform include the data ingested and generated by the Pilot Use Cases. Examples are:

Water body masks (raster and vector) extracted from Sentinel‑1 and Sentinel‑2 scenes.

Rice paddy masks (raster, as shown on Figure 5)

Collected Tweets in different languages and matching each use case (vector/features)

Weather (temperature, wind, precipitation, …) forecast data (raster)

Administrative data from Finland (raster and vector)

From FMI ClimGrid:

Daily Snow Depth in Finland between 1961 and 2018, 10km resolution.

Daily Mean Temperature in Finland between 1961 and 2018, 10km resolution.

Figure 6 GIS Viewer: Add Data¶

Layers Panel

The Layers Panel contains 4 tabs:

Layers: Lists the pre‑configured and the selected layers, organised in three groups:

Feature Layers: Includes areas drawn on the map, and vector layers (i.e. served via OGC WFS)

Tile Layers: Includes the raster layers (i.e. served via OGC WMS and WMTS)

Map Layers: Includes the pre‑configured base maps: a Street Map and a World Map (satellite imagery) both served by ArcGIS Online.

The layers may be enabled and displayed using check-boxes and may be moved up and down with the mouse to control in which order they appear on the map.

At the bottom of the panel, an option area allows automatically refreshing the data at a regular interval.

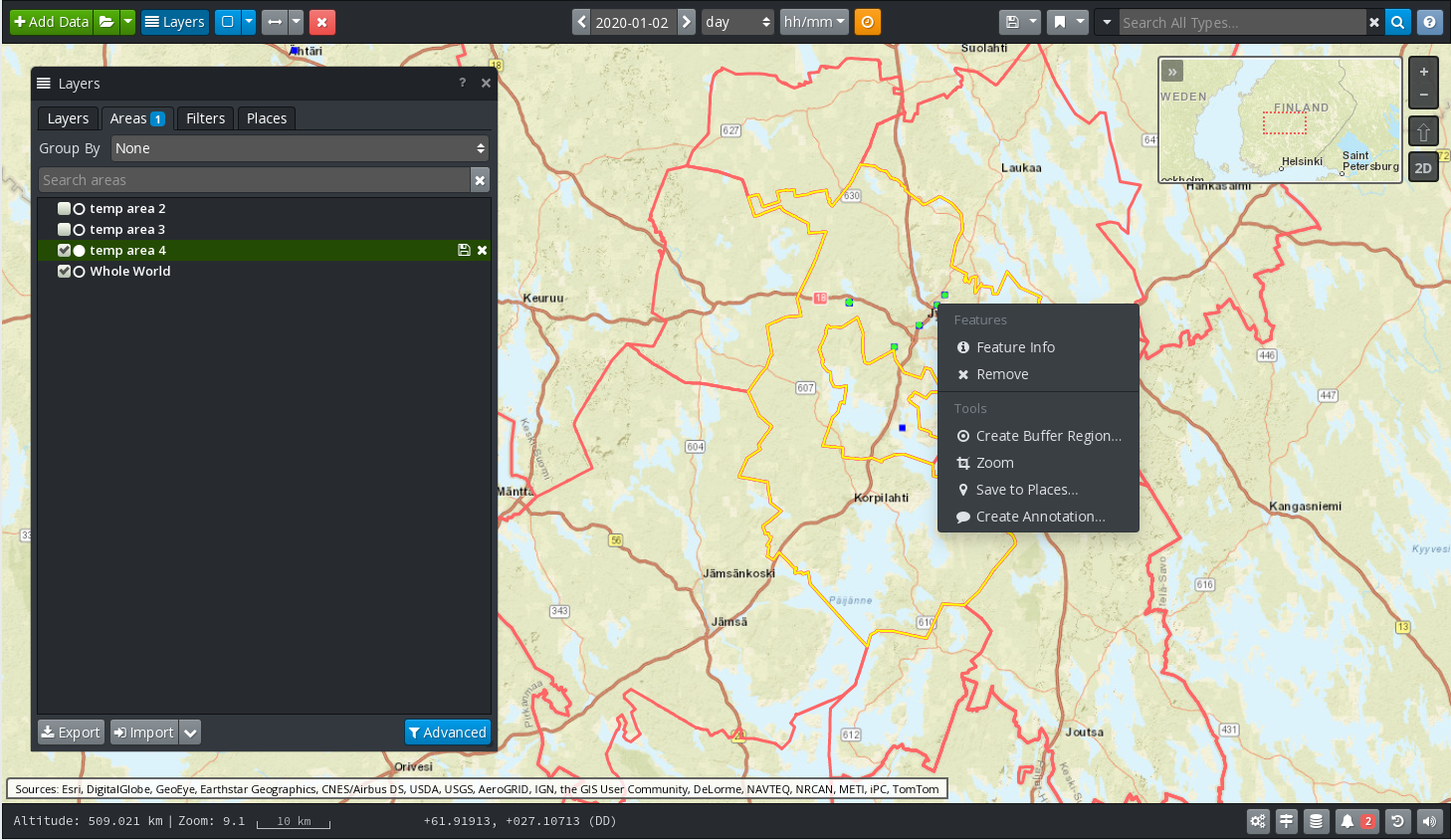

Areas: Lists the areas drawn on the map and the closed polygon features converted into areas. Areas may be selected to filter the features to be displayed on the map. This is further explained below.

Filters This tab allows creating and managing advanced filters by combining layers, areas and feature properties.

Places: This tab allows creating and managing custom locations.

Layer Styling

When a layer is selected in the list several buttons appear at the bottom of the panel:

A Style button appears. Clicking on this button reveals controls for customising the appearance of the layer on the map. As can be seen on Figure 7, available controls include the opacity, brightness and contrast as well as the base colour. If a Feature Layer is selected, the display size may also be adjusted.

If a Feature Layer is selected a Label button is also available. This allows selecting which feature properties must be displayed on the map and how (size and color).

Controls under the Options button allow configuring how often the layer will auto‑refresh on the screen.

Figure 7 GIS Viewer: Styling a Layer¶

Features Filtering

As mentioned above, the features, including vector data, must be selected using custom areas.

In order to search for features located in a bounding box:

Select one or more feature layers from the EOPEN GeoServer.

Click on the blue button in the GIS Viewer banner that shows a rectangle. By default, the selection has the shape of a rectangle. Expand the menu associated to the button to select other shape types.

When the tool is enabled, use the mouse to select an area on the map. A context menu appears automatically. In this menu select the Load entry. The selected area is added in the list of areas in the Layers panel and the features located in the area are automatically queried from the server and displayed.

It is then possible to select individual features and visualise their properties.

If a feature is itself a closed polygon it can be used to define a new area. To do this, select the polygon, open its context menu (click on the right mouse button) and select the Add entry.

Custom areas are managed via the Areas tab in the Layers panel. In particular, areas may be renamed, imported and exported, enabled and disabled, etc.

Figure 4, above, shows an administrative region located in Finland (red polygon on the map), used as an area for filtering Tweets about snow in the Finnish language. One of these Tweets has been selected and its information displayed in the floating panel located in the bottom left of the screen.

Figure 8 GIS Viewer: Feature Selection¶

This is only a short introduction to OpenSphere. Many more features may be discovered by using the tool directly.

EOPEN Extensions for OpenSphere

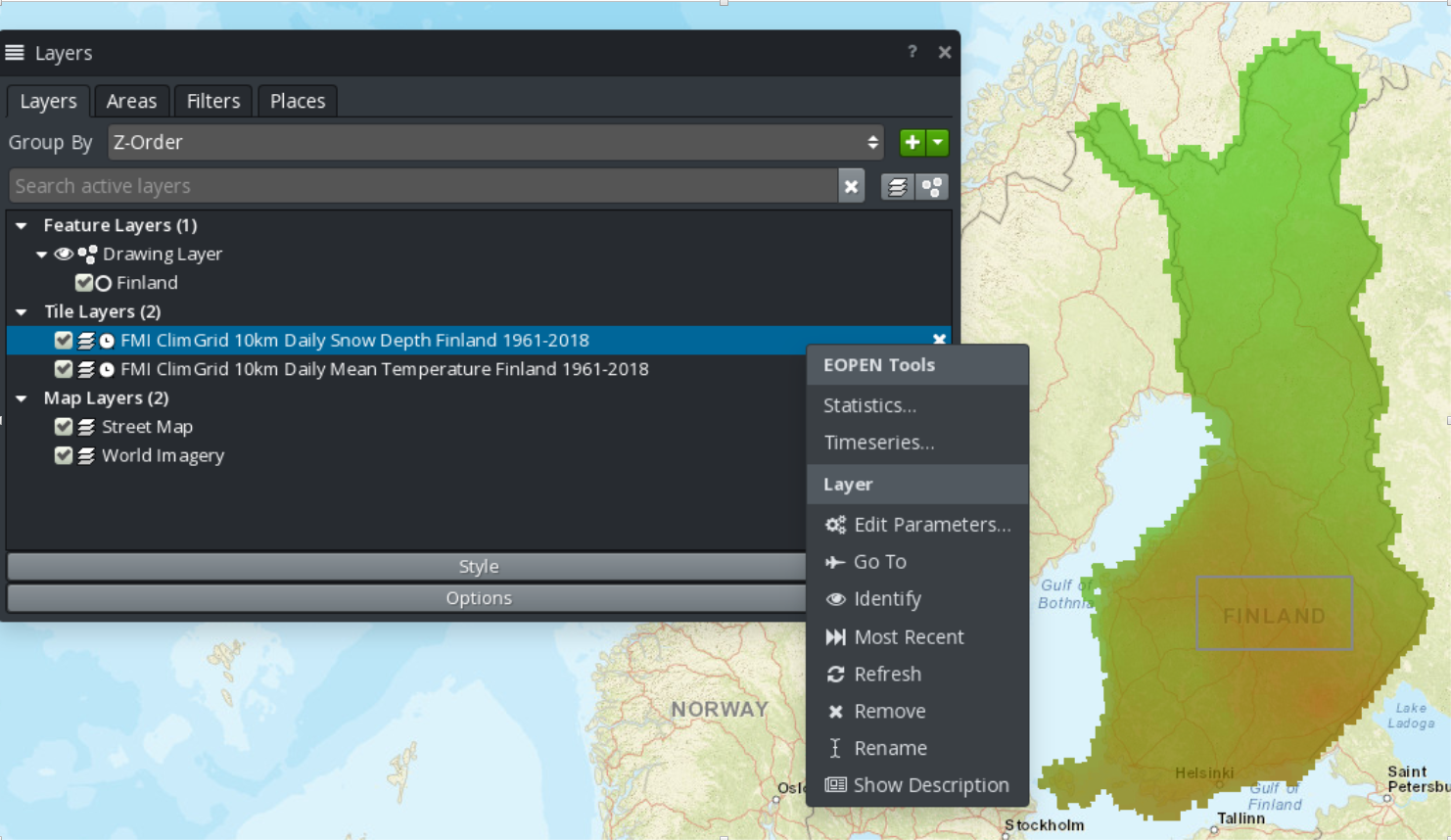

An extension has been developed that permits to trigger the execution of workflows in the EOPEN platform from within the GIS Viewer.

At the time of writing this document, the plugin supports two workflows:

The extraction of statistical information from FMI ClimGrid products

The extraction of timeseries from FMI ClimGrid products

To accomplish this, execute the following steps:

In the GIS Viewer, select an area of interest and give it a name. If you want to select or rename an AOI, check “Features Filtering” at the precedent item.

Select one or both of the FMI ClimGrid layers.

Right-click in the Layers panel and select either the Timeseries or the Statistics entry.

Figure 9 FMI ClimGrid Timeseries and Statistics: OpenSphere plugin¶

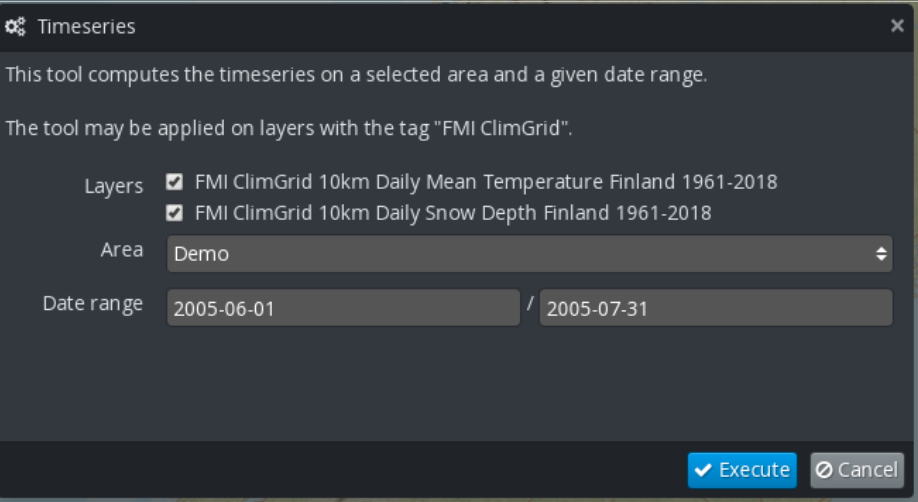

- The plugin displays a dialog box (Figure 10) that allows selecting the layer,

the area and a date range (or a single date for the Statistics execution). Click on the Execute button to trigger the execution of the corresponding workflow in the EOPEN Platform.

Figure 10 FMI ClimGrid Timeseries and Statistics: OpenSphere plugin¶

When the workflow execution reaches completion, a notification is sent by email.

- In the Dashboard, access the ClimGrid Statistics or the ClimGrid Timeseries component,

select the execution instance and visualise the generated data (Figure 11).

Figure 11 FMI ClimGrid Timeseries and Statistics: Dashboard Components¶

These two dashboard components are described in sections ClimGrid Time Series

and ClimGrid Statistics.

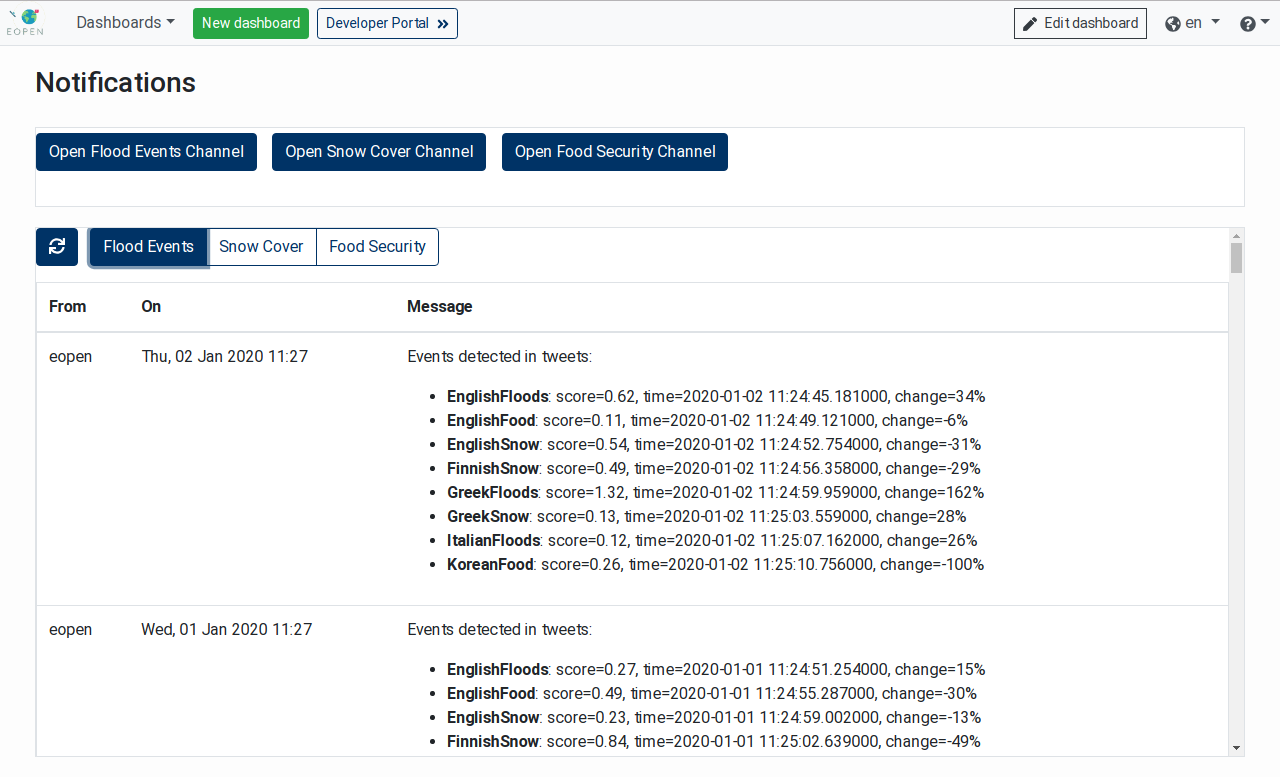

Notifications and Instant Messaging¶

The Notifications Dashboard contains the controls and components that allow users receive the notifications issued by the applications running in the EOPEN Platform. The integrated instant messaging system is the open‑source (Team Edition) of Mattermost (https://mattermost.com).

As three Pilot Use Cases (PUCs) are implemented in EOPEN, three dedicated Mattermost channels have been pre‑configured and may be used by the application developers to issue notifications from within their workflows.

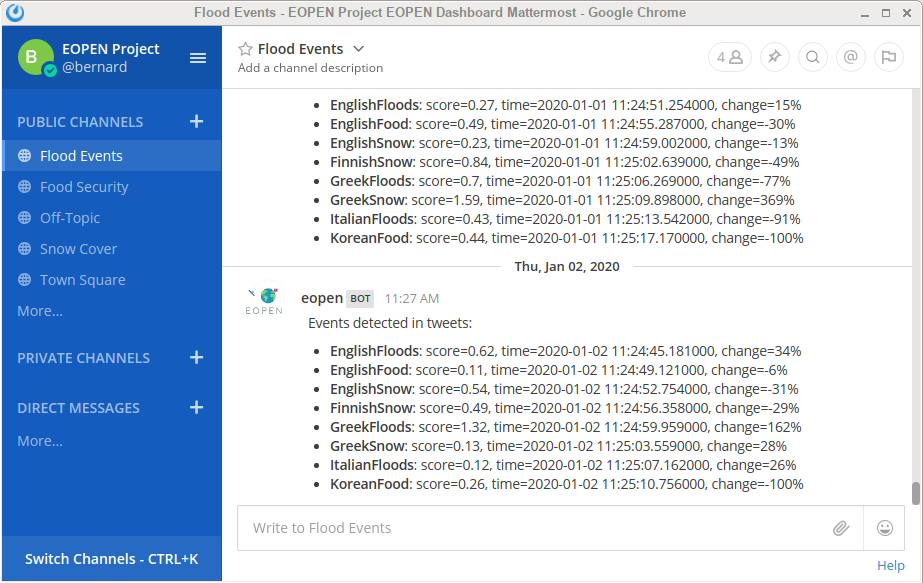

The page, represented in Figure 13, contains at the top three buttons which allow opening the Mattermost Web Client in a separate window. Each of the three buttons opens Mattermost on a specific PUC channel (see Figure 14).

The Mattermost Web Client is however fully featured. It thus allows selecting and reading from any PUC channel, searching for notifications, and issuing new notifications.

The Notifications Dashboard also contains an integrated Mattermost message viewer. It is currently rudimentary and does not automatically refresh the content nor allows sending new notifications. The message viewer shows three buttons for selecting the PUC channel of interest and a “Refresh” button (double‑rotating arrows icon, on the left) for refreshing the content of the viewer.

Figure 13 shows the notifications issued by the Events Detection in Tweets Data service which is scheduled to run once a day:

Figure 13 Notifications in the EOPEN User Portal¶

Figure 14 shows the same notifications displayed in the Mattermost Web Client:

Figure 14 Notifications in the Mattermost Web Client¶

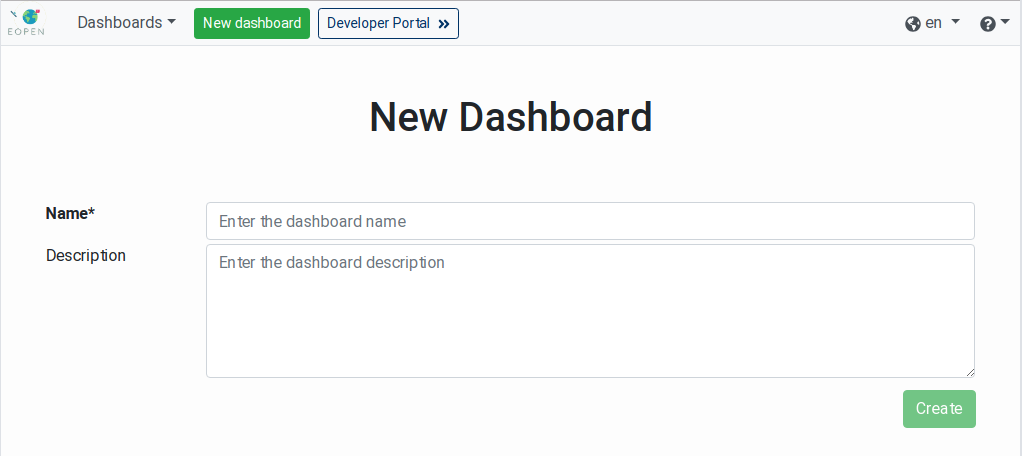

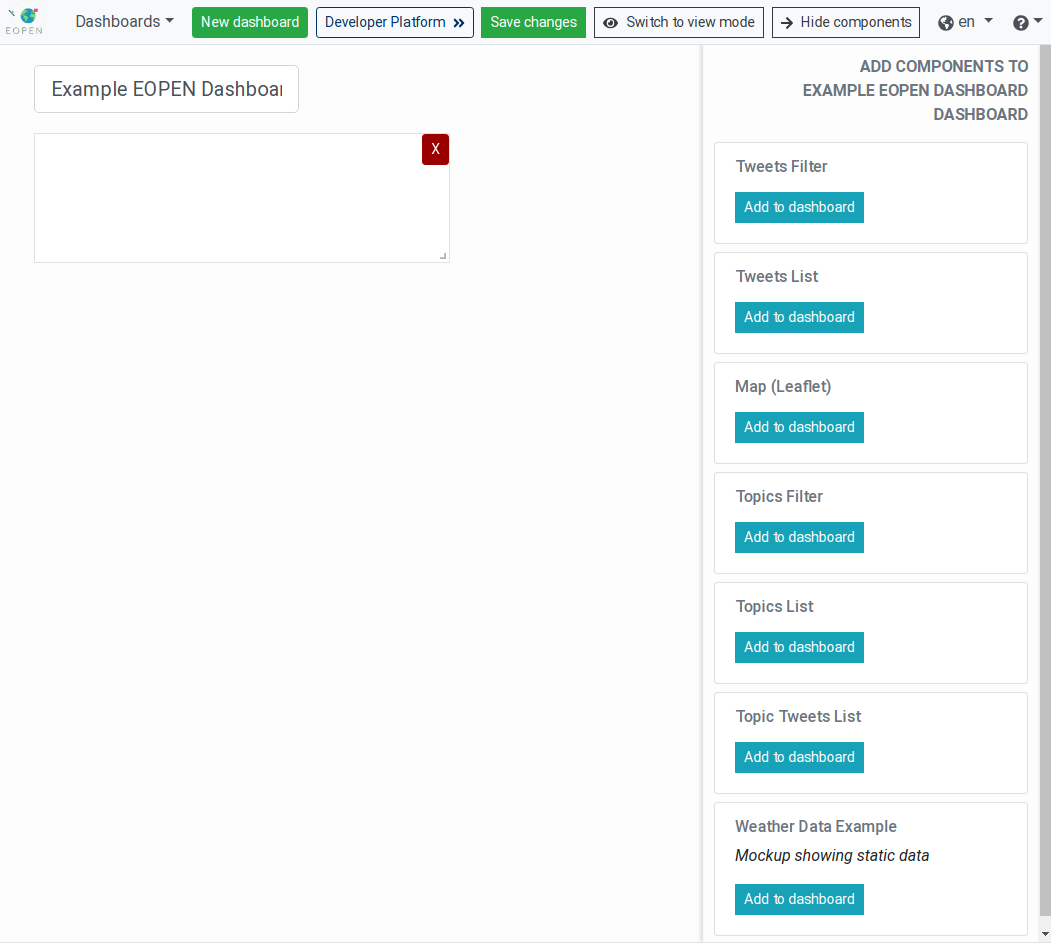

Creating Custom Dashboards¶

As indicated above in the site map section, users may create their own dashboards and integrate in them any combination of web component already available in the platform.

To create a new dashboard, click on the New dashboard button located in the page banner. A new page, shown on Figure 15, is displayed asking for the name and the description of the new dashboard. The name will be used to refer to the dashboard in the Dashboards menu.

Figure 15 Creation of a New Dashboard¶

To cancel the creation of the dashboard, simply navigate to another page of the EOPEN User Portal. Otherwise, enter the dashboard name and description (optional) then click on the Create button.

The new dashboard is displayed. This contains an empty web component. The banner shows a new button labelled Edit dashboard and the “Dashboards” menu now includes the new dashboard:

Click on the Edit dashboard button to enter the editing mode:

Figure 17 New Dashboard in Editing Mode¶

In editing mode, the page displays the following new elements in the banner:

A Save changes button which, when clicked, stores the new dashboard configuration on the server and quits the editing mode.

A Switch to view mode button which allows cancelling the changes and exiting the editing mode. When this button is clicked, a popup dialog box is displayed warning that all the modifications will be discarded and asking for confirmation.

A Hide components button for hiding the components panel (described below) while remaining in the editing mode. This is particularly useful to obtain a preview of the dashboard in normal mode.

The “components panel” located on the right side of the page lists the

web components currently available in the platform. Each of these is

individually described in Processes Management, below. Click

on a component Add to dashboard button to add a new instance of the

component into the dashboard.

On the dashboards, each component instance shows two controls:

An X in a red button located in its top‑right corner: Click on this button to remove the component instance from the dashboard.

An angle icon located in its bottom‑right corner. Click and drag this icon to resize the component.

To re‑arrange the components click in its center area and drag it around. A component will always be pushed upwards until it reaches the top of the dashboard or encounters another component.

Note: Some of the web components present in the list may already be included in one or more of the System Dashboards. It is for example the case of the Tweets Filter and the Tweets List. This does not prevent using them in other custom dashboards.

As indicated above, when the configuration of the new dashboard is finished, click on the Save changes button to store the configuration and exit the editing mode.

From now on, the custom dashboard may be re‑opened by selecting its name in the “Dashboards” menu located in the banner.

Modifying Custom Dashboards¶

A custom dashboard created as described in the previous chapter may be modified at any time by selecting it and then clicking on the Edit dashboard button located in the page banner.

The custom dashboard then enters the editing mode. Modify the dashboard as described above then click on the Save changes button to store the new configuration.

Available Graphical Components¶

This section introduces each available graphical (web) component.

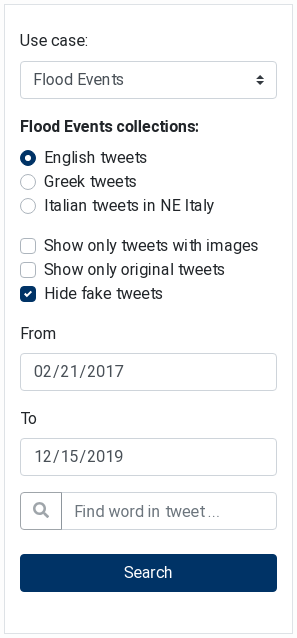

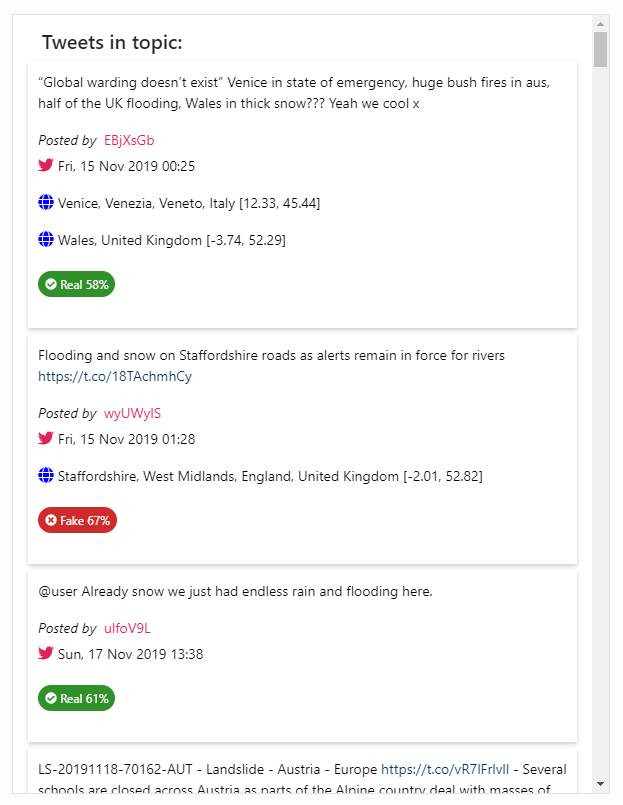

Tweets Filter¶

The Tweets Filter component (see Figure 18) is used to filter collected tweets (stored in the EOPEN Platform) based on various search criteria. It is meant to be used jointly with the Tweets List component as all the tweets selected using this filter will be automatically displayed in the list.

Note: This component is present in the Social Media system dashboard

(see Social Media (Tweets) Viewer).

The tweets are filtered based on the pilot use case, their language, a date range and optionally on a word that must appear in the tweet message.

In addition, switches allow filtering out the tweets that do not include an image, have been re‑tweeted (i.e. they are not original), or which are considered as fake by the integrated fake tweets detector.

Clicking on either the start or the end date reveals a calendar in which a new date may be selected.

Click on the “Search” button to submit the filter and update the tweets in the Tweets List component described in the following section.

Figure 18 Tweets Filter showing search criteria¶

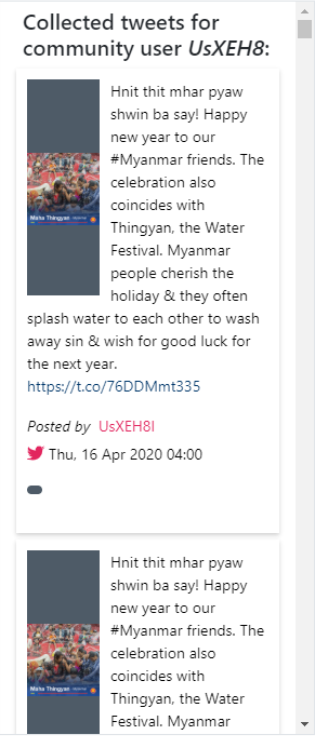

Tweets List¶

The Tweets List component (see Figure 19) displays tweets fetched from the EOPEN Platform. When combined with the Tweets Filter component, it displays the tweets matching the selection criteria.

Note: This component is present in the Social Media system dashboard

(see Social Media (Tweets) Viewer).

The Tweets List component displays the tweets in a paginated list. Controls located at the top allow selecting the size of each page (between 50 and 500 entries) and navigating between the pages.

A switch allows visualising the tweets on the Map (Leaflet) component if this is included in the same dashboard.

Figure 19 Tweets List showing matching tweets¶

Map (Leaflet)¶

The Map (Leaflet) component displays an interactive map (implemented with the Leaflet JavaScript library). It may be used to display simple features on a map.

Currently, only the Tweets List component makes use of the Map (Leaflet) component to display markers corresponding to the tweets estimated location. Clicking on a marker reveals the tweet message in a popup box.

Note: This component is present in the Social Media system dashboard

(see Social Media (Tweets) Viewer).

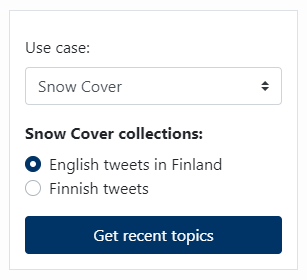

Topics Filter¶

Topics here are defined as groups of social media posts (i.e. tweets) that refer to a specific incident, subject, etc. and are produced by text clustering techniques. The Topics Filter component is used to select on which tweets collection topic detection will be performed and it is meant to be used jointly with the Topics List and Topic Tweets List components.

Topics Filter is a simple form where the user is able to first select the use case of interest and then the collection of interest. By clicking the Get recent topics button, a service searches for trending topics in the 400 most recent tweets of the selected collection and the detected topics are displayed on the Topics List component.

Figure 20 Topics Filter¶

Note: Search for topics in Korean tweets about food security is omitted due to the difficulty to handle their special characters.

Topics List¶

The Topics List component visualizes the trending topics in the tweets that are collected for the pilot use cases of EOPEN. When the search for topics, which can be triggered by the Topics Filter component, is completed, each of the detected clusters is presented as a word cloud, i.e. an illustration of the most mentioned terms per topic, where their frequency is shown with font size. Every time a word cloud is clicked, the complete set of tweets that are contained in the corresponding topic appear in the Topic Tweets List component.

Figure 21 Topics List¶

Topic Tweets List¶

The Topic Tweets List component resembles the Tweets

List component (see Tweets List)

but contains only the posts of a specific topic. When a word cloud from

the Topics List component

(Topics List) is clicked, then the

Topic Tweets List is refreshed to display the tweets that the selected

topic comprises.

Figure 22 Topic Tweets List¶

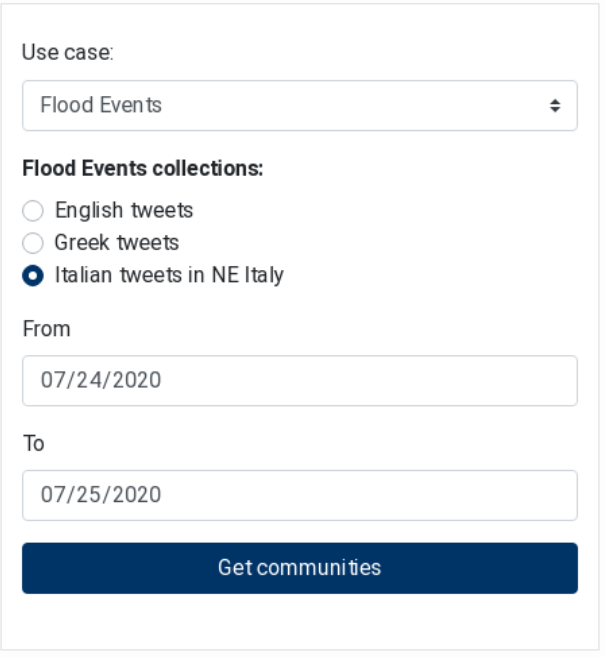

Communities Filters¶

Community detection refers to the discovery of online communities of social media users, i.e. Twitter accounts that are interlinked by mentioning each other, while key-player identification is the discovery of most influential users in the above communities. The Communities Filters component is used to select the tweets collection the community and key-player detection will be applied on and is meant to be used jointly with the Communities Graph, Top Key-Player List and User Tweets components.

Communities Filters (Figure 23) is a form where the user is able to define the collection of interest by selecting the use case from a dropdown box and the language from radio buttons, while the time period can be defined by selecting a “from” date and a “to” date. By clicking the

“Get communities” button, the community detection service is called with the selected options as input parameters.

Figure 23 Communities Filters¶

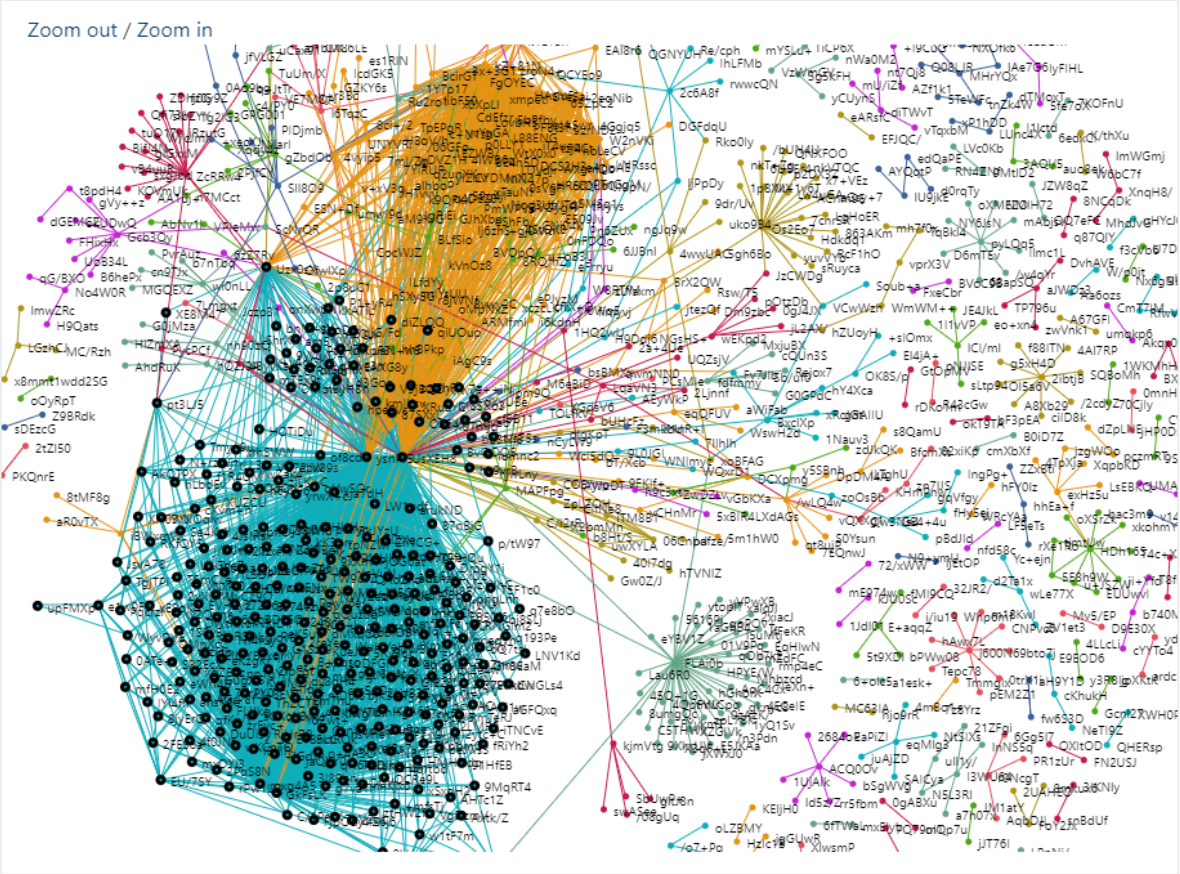

Communities Graph¶

The results of the detection service are used to visualize in the Communities Graph component (Figure 24) the social network of Twitter accounts that are connected via mentioning each other. The unique users found in the pairs are displayed as nodes, pairing is displayed as edges between the two users/nodes, and communities are expressed as different colours of the nodes and edges. The IDs of the users are pseudonymised for privacy reasons. The graph can be zoomed in and out, to take a closer look at the detected communities.

Figure 24 Communities Graph¶

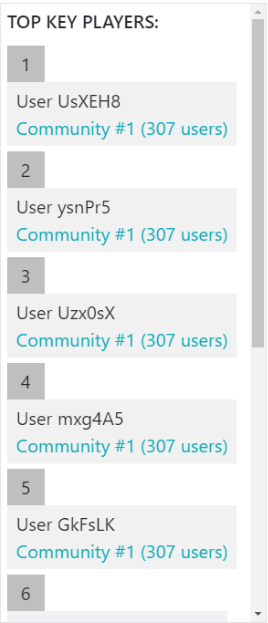

Top Key-Player List¶

The community detection service also returns the top ten key-players that have central position in the communities and are considered influential to the other users on Twitter. The sorted list is displayed in the Top Key-Player List component (Figure 25), where the pseudonymized ID of each key-player and the community they belong to are shown. The community is mentioned by its ID, along with the number of users it comprises, and is also coloured with the same colour of the related nodes. When clicking on the coloured text, the nodes of the community are highlighted in the graph (see the bottom left community in Figure 24) to indicate the position of the community. For the visualisation of each key-player in the list, the Top Key-Player Card template is used.

Figure 25 Top Key Players List¶

User Tweets List¶

When a node in the graph of Figure Figure 24 is clicked, then the tweets that have been posted by the respective user are displayed in the User Tweets List component (Figure 26). The Tweet Card template, already implemented from other social media-related components, is reused to show each single tweet in the list.

Figure 26 User Tweets List¶

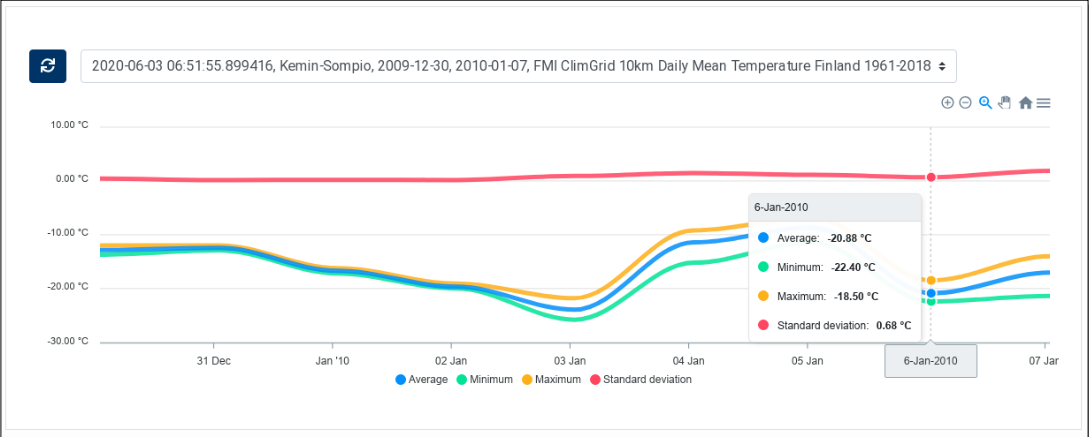

ClimGrid Time Series¶

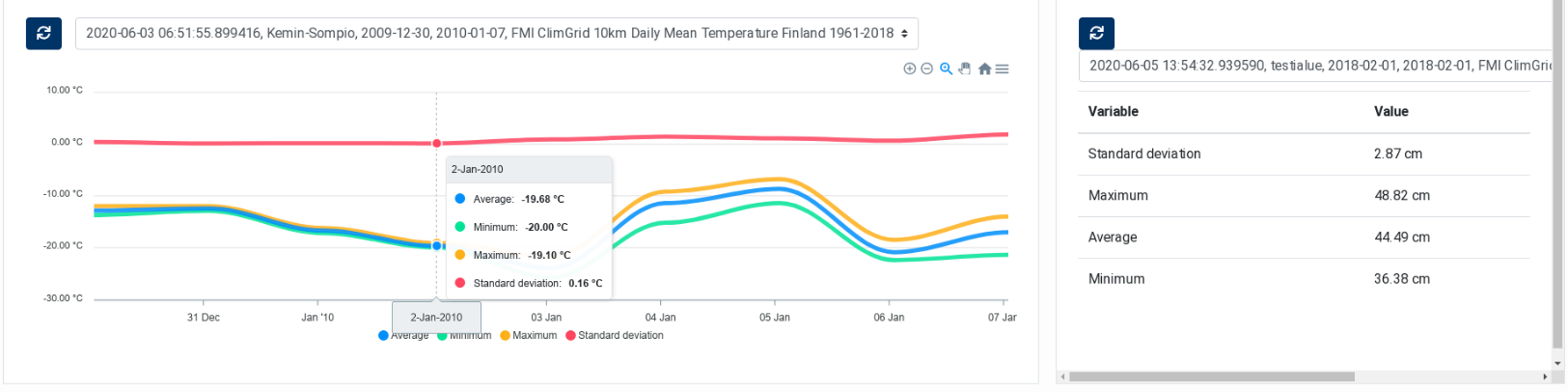

The ClimGrid Time Series component is used to visualise the timeseries data extracted

from the ClimGrid products provided by FMI. As described in GIS Viewer, above,

the information extraction is triggered via an extension implemented in the GIS Viewer OpenSphere.

The inputs to be provided are the area of interest (AOI) (which is given a name), a date range,

and the ClimGrid product to be processed.

After the processing, the result is made available via a drop-down list included at the top of the component. Each entry in the list indicates when the processing has taken p lace (date and time, the name of the AOI, the date range, and the name of the ClimGrid product. Click on the icon at the left of the list to reload the values from the server.

Select an entry in the list to display the corresponding timeseries in the chart. Four curves are displayed, corresponding to the average, the minimum, the maximum and the standard deviation of the values within the AOI.

The legend, below the chart allows to display or hide each of the curves. Controls in the top right corner allow zooming and panning in the chart as well as exporting the chart in SVG and PNG formats. Click on the house icon to re-centre the chart and reset the zoom level.

Figure 27 ClimGrid Time Series¶

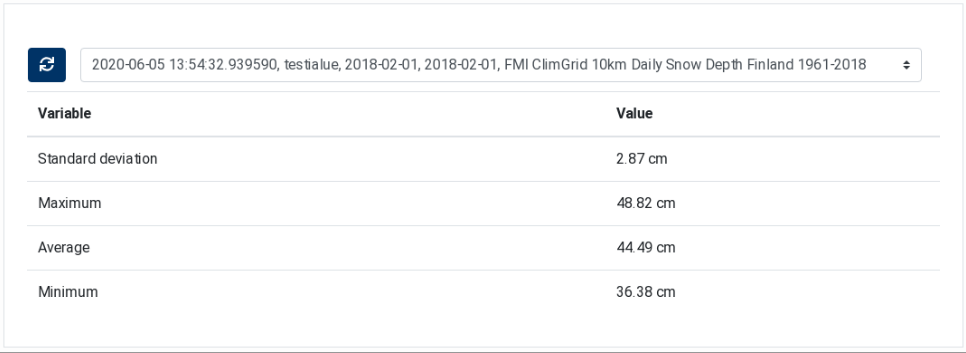

ClimGrid Statistics¶

The ClimGrid Statistics component is similar to the ClimGrid Time Series component. It is also used to display data extracted from FMI ClimGrid products. The difference is that this component displays in a table statistical information extracted from the product on a specific day. As the other component, it contains two parts: a drop‑down list at the top, for selecting the processing execution, and a table that displays the extracted statistics.

Figure 28 ClimGrid Statistics¶

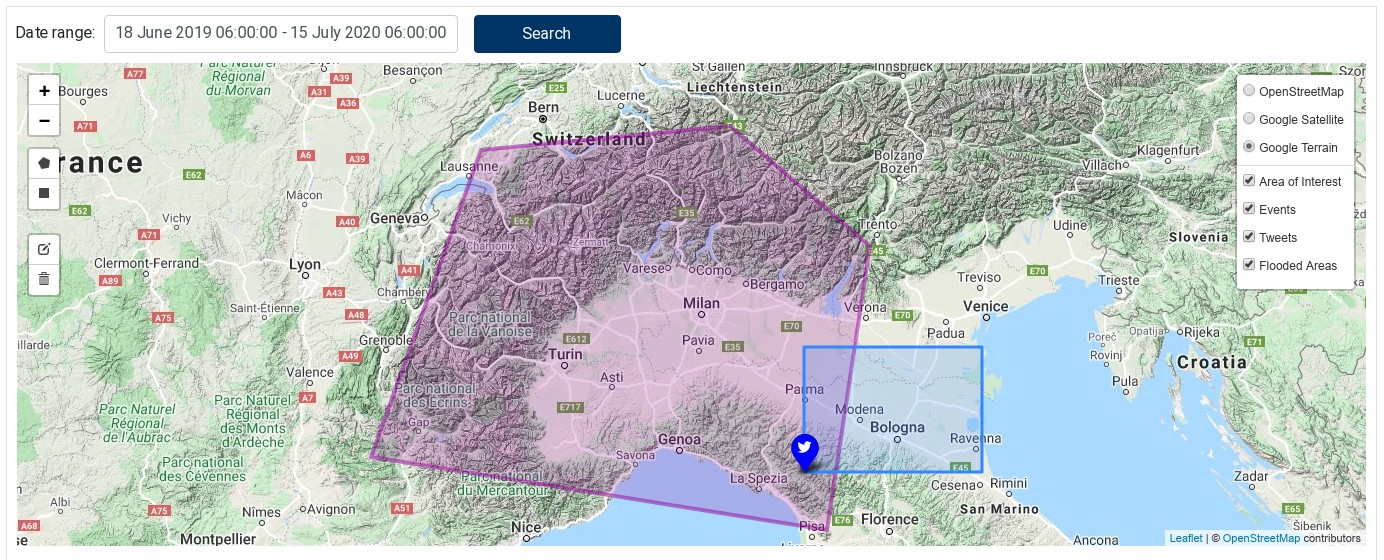

Geo‑localised Linked Data¶

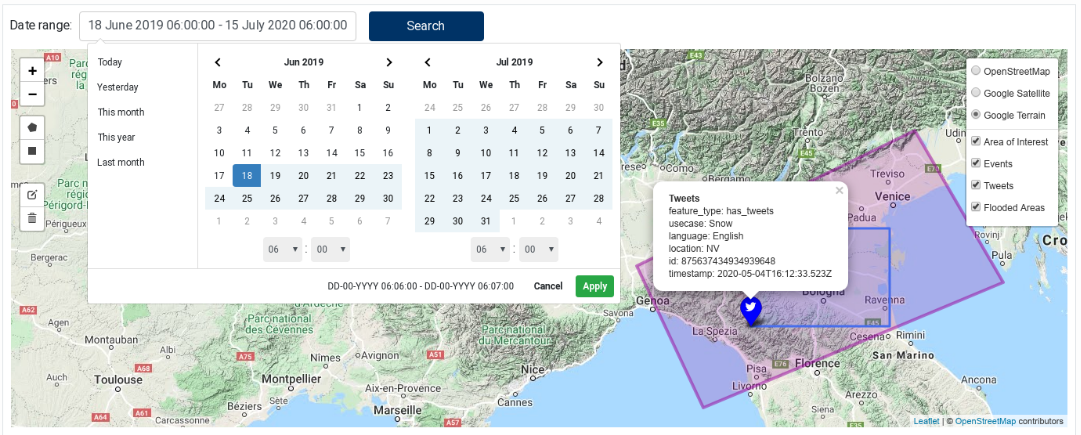

The Geo‑localised Linked Data component allows querying the EOPEN backend for Linked Data using an area of interest (AOI) and a time of interest (TOI), and to display the results on an interactive map.

The component contains a small form to select a date range at the top and a map to draw/edit the AOI and visualise the data. To draw a rectangle or a polygon on the map, select the corresponding icon in the left controls. Figure 29 shows the component with a selected AOI and the matching features (events, tweets and flooded areas). Figure 30 shows the date range selecting as well as tweet properties.

The component lets you draw only one shape, which appears in pink colour on the map. If you draw a new one, the previous one is removed. An existing AOI can be edited: use the “pencil” icon to enter the editing mode and click on the “save” button when done. Use trash icon to delete the AOI shape.

Click on the “Search …” button to search for data. This button is enabled only if a valid AOI and TOI are selected.

If the result list is empty, the text “No result” is shown next to the search button.

Results are displayed on the map and one layer is created for each feature type (flooded areas, tweets, events, etc.)

Using the control on the right side, you can choose the base map and the layers to be displayed or hidden.

The “Area of interest” layer contains the rectangle or polygon of the AOI, which may also be hidden.

Click on a feature on the map to display its properties in a popup.

Figure 29 Geo-localised Linked Data showing a selected AOI and the matching features¶

Figure 30 Geo-localised Linked Data showing the date range selection tool and tweet properties¶

EOPEN Developer Guide¶

Abstract

This document is the Application Developer Guide of the EOPEN Platform. Its purpose is to describe the various features supported by the EOPEN Platform and made accessible to the authenticated developer users through a standard Web browser. Each feature is described individually in a dedicated section.

Guidelines are also included to help developers create algorithms and applications.

Annexes include a glossary of the main terms as well as an inventory of the EOPEN modules available in the EOPEN Platform (D6.5).

The content of this document is part of the result of the work performed on T6.2 “EOPEN System Integration”.

Version |

Date |

Reason |

Revised by |

Approved by |

|---|---|---|---|---|

3/5-Aug-2020 |

Internal review |

Gilles Lavaux, Valentina Faccin, Gabriella Scarpino (Serco) |

||

1.0 |

19-Aug-2020 |

First release |

Authors |

Leslie Gale |

Organisation |

Name |

Contact |

|---|---|---|

SpaceApps |

Bernard Valentin |

|

SpaceApps |

Hakim Boulahya |

|

SpaceApps |

Leslie Gale |

|

CERTH |

Stelios Andreadis |

This document is the Developer Guide of the EOPEN Platform. Its purpose is to describe the various features supported by the EOPEN Platform and made accessible to the authenticated developer users, either through a standard Web browser, or an FTP client.

To access the EOPEN Developer Portal navigate to the home page of the EOPEN Platform (https://eopen.spaceapplications.com) and click on the Click here to access the Developer Portal button:

Figure 31 EOPEN Portal Home Page (Fragment)¶

Note: Credentials for authenticating in the EOPEN Developer Portal are created upon request.

The document describes each individual feature in a dedicated section. An end-to-end scenario is also included which indicates the logical sequencing of the usage of the features, from the off-line preparation of custom algorithms to the execution of workflows and the retrieval of the generated outputs.

This document focuses on the interfaces and the features available to developer users, not platform administrators.

Document Structure:

Chapter %sintroduces the EOPEN Application Lifecycle and describes a typical end-to-end usage scenario that covers all the steps that must be executed to develop a custom algorithm, import the algorithm in the Platform and integrate it in a workflow, execute the workflow and access the results.Chapter %sprovides a detailed description of each feature implemented in the Platform.Section %sincludes a site map which depicts how each feature is accessed in the Web interface and how the user may navigate between them.Appendix %sprovides the definition of the terms and concepts used in the EOPEN Platform.Appendix %scontains the inventory of the EOPEN modules integrated in the First EOPEN Prototype. Concretely, modules may have been implemented as processes, services or workflows, depending on their needs.Appendix %sinstructs on how SNAP graphs designed, tested and exported using the SNAP Graph Builder tool (integrated in the SNAP Desktop application) must be transformed into parameterised graphs that may be executed using the command-line Graph Processing Tool (GPT). This operation is necessary to allow deploying and executing SNAP graphs within the Platform.Algorithms not implemented in Python must be executed similarly, that is using a Python script to trigger the execution of a process using a system call. This applies for example to R, Java, and binaries (e.g. compiled C/C++ and Go).

Appendix %sgives example algorithms implemented in Python 3 that permit to execute various processes in the EOPEN Platform.

Main changes against the EOPEN Integrated Prototype Platforms:

The Processor and Workflow concepts have been merged in favour of Workflow. This removes the ambiguity that existed between a resource type (Processor) and its definition (Workflow).

A user who creates a Process or a Workflow is automatically registered as its owner. By default, Processes and Workflows are only visible and may only be managed by their owner.

The Workspace and user Role concepts have been introduced to allow sharing resources, including Processes and Workflows. The fundamental rule is that a particular resource is only visible by the users who are given a role in one of the workspace the resource belongs to. To share a resource, a user who has the right to do so assigns that resource to one or more workspaces.

The management of the users and their roles in the workspaces is under the responsibility of the platform administrators. This is thus not covered by the current document as it focuses on the features provided to the process and workflow developers.

Migration of the Python 2 based modules to Python 3.

Added support for GPU-enabled Worker Nodes, which may be explicitly selected for running processes that can benefit from a GPU (such as the Basement simulation application).

Workflow engine (Airflow) optimisation to mitigate the delays due to the orchestration mechanism.

The EOPEN Platform is based on the ASB Framework. This documentation describes features available

in version v1.1.3 of the ASB Framework. The version of the framework can be accessed in the

settings page, described in User Settings.

AOI |

Area of Interest |

API |

Application Programming Interface |

ASB |

Automated Service Builder |

EO |

Earth Observation |

EOPEN |

opEn interOperable Platform for unified access and analysis of Earth observatioN data |

ESA |

European Space Agency |

FSM |

Full Strip-Map |

FTP |

File Transfer Protocol |

GDAL |

Geospatial Data Abstraction Library |

GPT |

Graph Processing Tool |

GUI |

Graphical User Interface |

HTTP |

Hyper-Text Transfer Protocol |

JSON |

JavaScript Object Notation |

OGC |

Open Geospatial Consortium |

S1, S2, S3 |

Sentinel‑1, Sentinel‑2, Sentinel‑3 |

SNAP |

Sentinel Application Platform |

SpaceApps |

Space Applications Services |

VM |

Virtual Machine |

WFS |

Web Feature Service |

WMS |

Web Map Service |

WPS |

Web Processing Service |

Introduction¶

The EOPEN Platform is a tailored and customized version of the ASB framework. Available features include the possibility to upload custom algorithms, to integrate these algorithms within new workflows, and to run the workflows in a distributed environment.

This chapter introduces the successive steps to be followed from the local offline development of an algorithm to the retrieval of the generated outputs from the Cloud environment.

The EOPEN Application Lifecycle¶

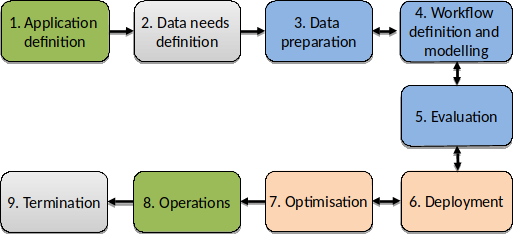

Initially defined as the use case life cycle in the proposal, the application lifecycle depicts the steps to create an operational application (service). It comprises the following steps:

Figure 32 EOPEN Application Lifecycle¶

Steps 1 to 5 are performed to establish the concept and validate the models and verify the concept achieves the goals of the application. With respect to the EOPEN platform these are most often performed offline to EOPEN with the developers working on PCs and local servers using example data sets. These activities can however influence the eventual deployment of the concept to create an operational service. For instance the data may only be available on certain platforms, or by choice processing should be performed on a platform where the data is hosted to avoid excessive transfers of data.

Once the concept is verified and validated Steps 4 to 6 are performed using the EOPEN Platform. In general an application will comprise one or more algorithms organised in a workflow. The developer imports the algorithms into the EOPEN platform and using the designed workflow configures the imported algorithms using the workflow editor.

Some terminology is unavoidable. To avoid discussions on what is an application, what is a process …, the following terminology is used:

Process – A Process is a Dockerised Algorithm. A Process definition includes a name, description, and version, as well as (typed) input and output parameters, and software and hardware dependencies. The typed inputs and outputs allow ensuring the compatibility of the parameters inter‑connected in the workflows. The EOPEN Developer Portal comes with a Process Import Tool that automates the packaging and the registration of custom processes in the system.

Workflow – A Workflow implements a application by specifying the processes that must be executed together with their inter‑connections (data flow). The EOPEN Developer Portal includes a graphical Workflow Editor for creating workflows interactively, e.g. selecting the appropriate processes and connecting parameters with drag‑&‑drop. Workflows may be executed on-demand, scheduled or externally triggered. The platform automatically generates the parameterization forms that allow giving values to unconnected input parameters. EOPEN includes interfaces for monitoring and control, reporting and data access.

Additional concept definitions are provided in Appendix %s of this document.

Step 6, Deployment, is fully automated.

Initially Step 7, Optimisation, is not performed. It may also not be necessary prior to transferring to operation if no performance issue is detected. However should performance be an issue, or a shift to HPC is considered for instance then some re-organisation of the workflow may be needed. Another example is the use of SNAP. Experience shows that by breaking down a SNAP graph into sub-graphs leads to a substantial increase in performance. A developer may choose to do this right from the start, or after confirming the application is performing as expected.

Step 8, Operations, is in most cases performed in multiple stages. The first execution of the user application, execution of the Workflow, will be performed to reverify and revalidate the application. Once this is completed then the application is ready for operations.

Step 9, Termination, is performed to end the use of the application with the eventual off-line archiving of the results (output products). In the case of an EOPEN application this will follow the principles set out in the Data Management Plan.

End-to-End Usage Scenario¶

This section explains the steps that an application developer follows to make an application operational on the EOPEN platform. To perform the tasks EOPEN provides a Web-based user interface which is described in details in the next chapter. The table below introduces each step and refers to the detailed descriptions.

Lifecycle Step |

EOPEN Platform |

Steps 1 to 5 – Concept Phase |

Application Concept Development The EOPEN platform does not restrict the application developer in their choice of programming language, toolbox or libraries. Examples of the implementation can be a Python script using the GDAL for processing satellite imagery which can be tested on a local machine, or a SNAP graph edited and executed using the Graph Builder included in the SNAP Desktop application. When the application is considered ready, it may be imported in the framework. |

Steps 4 to 8 – Pre-operational |

Importing the developed algorithms Developed algorithms must be

imported in the framework using

the Process Import Tool. This is

a three steps process, as further

described in

Configuring a new workflow Imported processes must be

integrated in the workflow of

pre‑existing or new workflows

before being executed. To do so,

the Workflow Editor is used to

create and edit workflows,

integrating and inter‑connecting

processes in a graphical manner

(see Conclusion of the pre‑operational phase is the execution of the workflow as described in Step 8 – Operational. |

Step 8 – Operational |

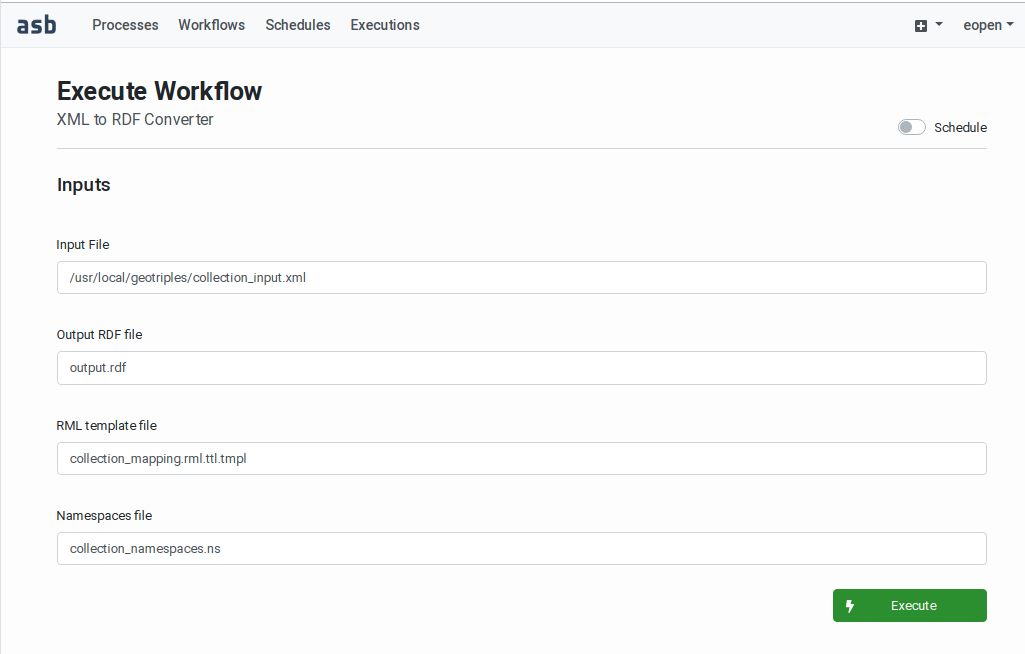

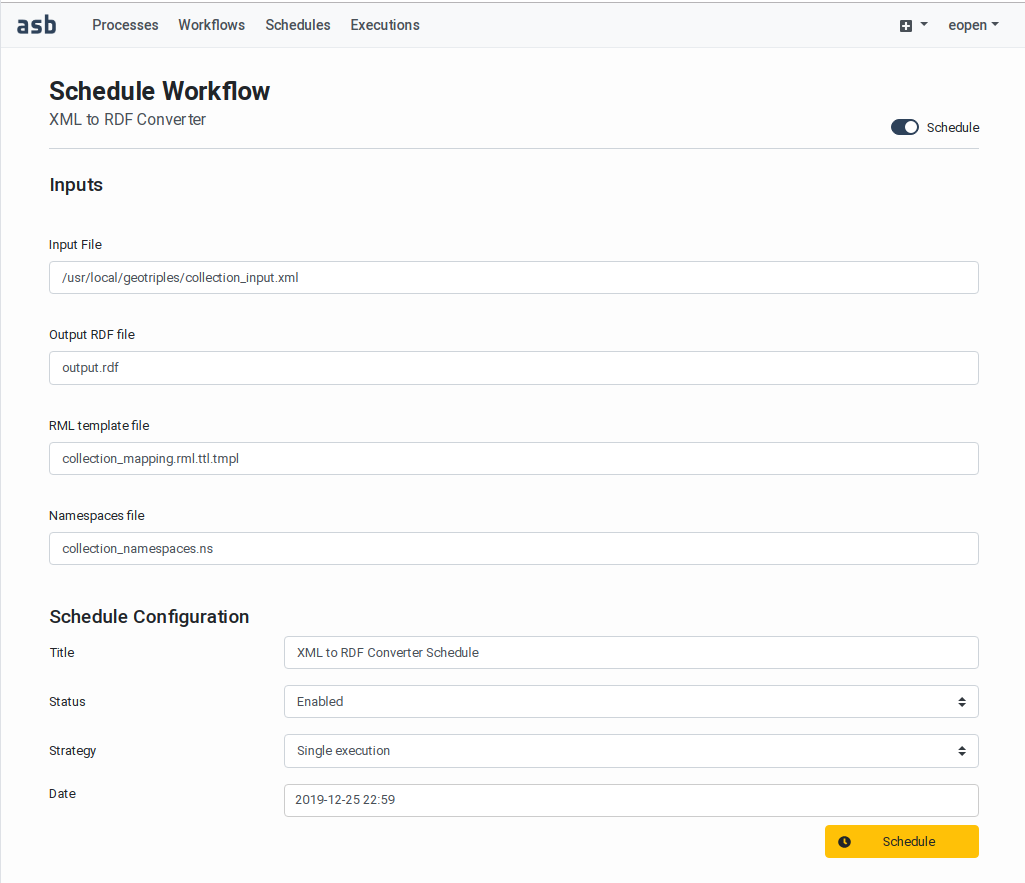

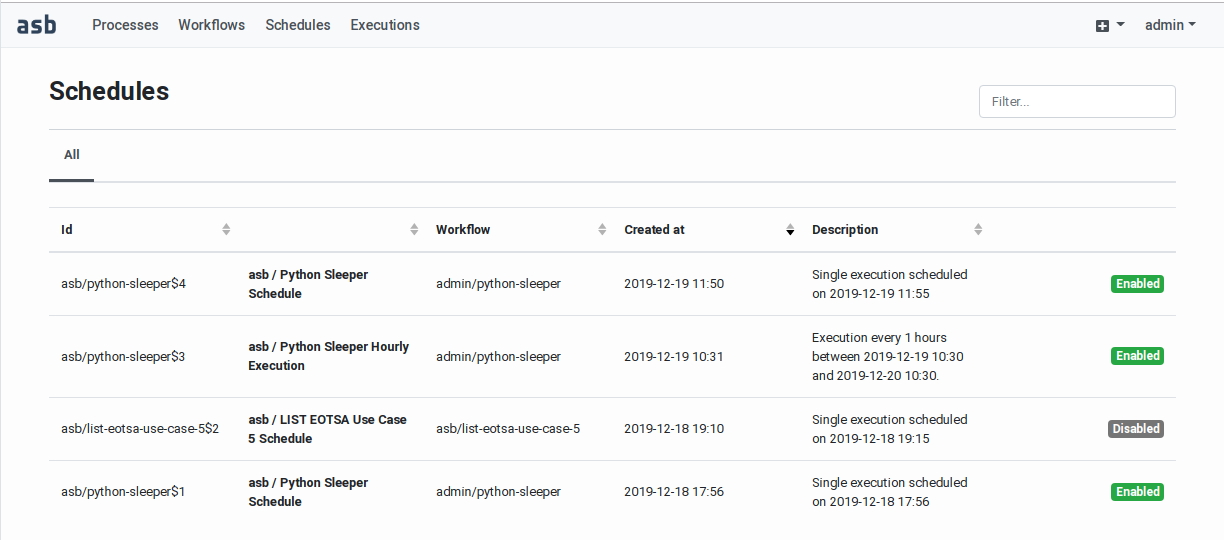

Executing the workflow A workflow may be executed

on‑demand, through the Service

web interface. If the workflow

has unconnected inputs, these are

used to automatically generate a

parameterization form, as

described in

Alternatively, the platform

proposes to schedule the deferred

execution of the selected

workflow. A form allows

configuring a single deferred

execution or multiple executions

triggered at regular interval or

on specific days in the month.

The scheduler pages and controls

are described in

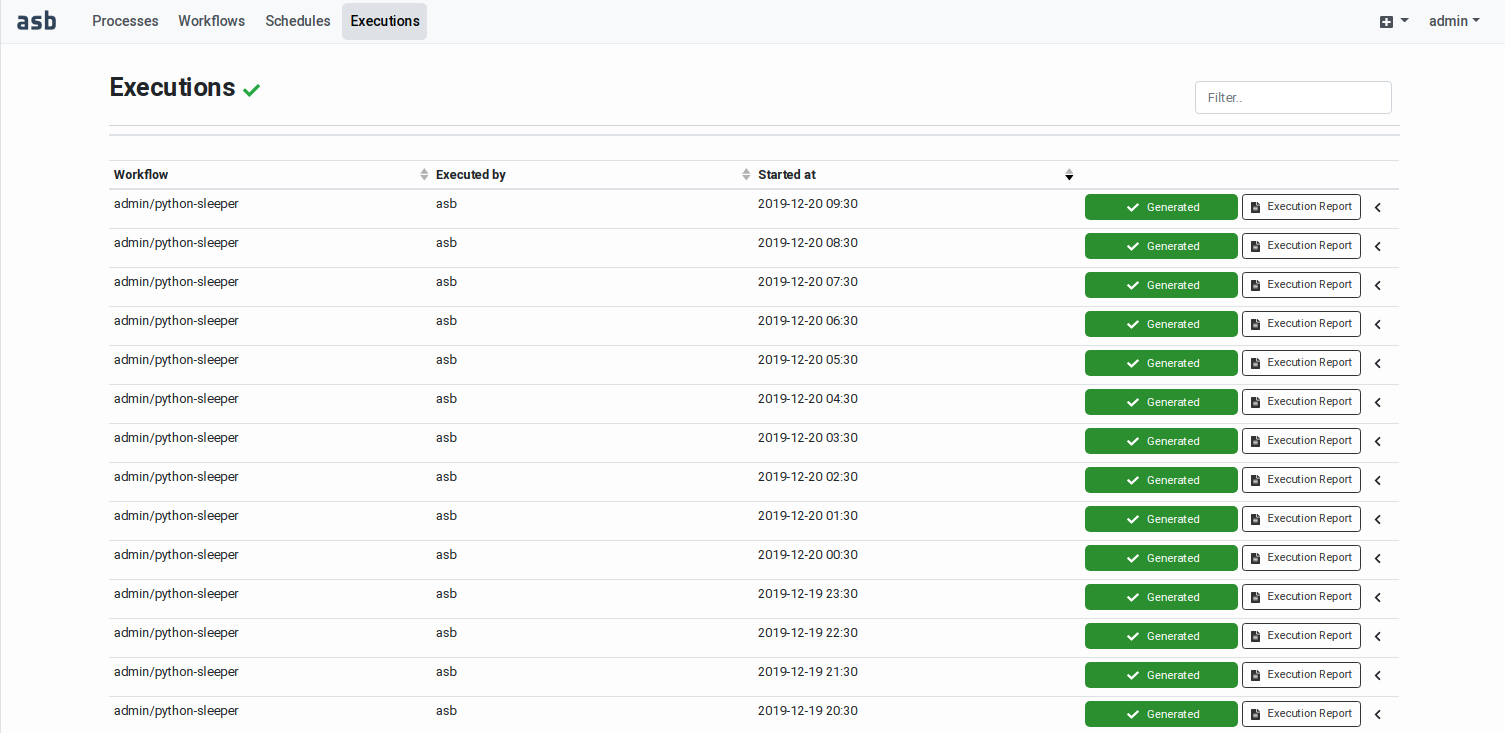

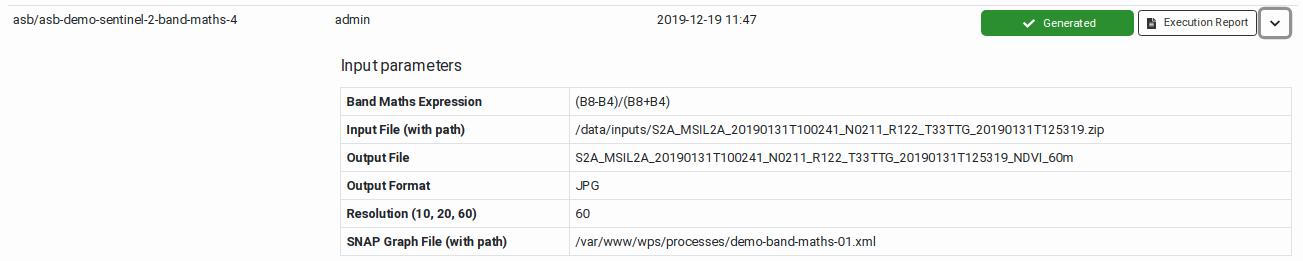

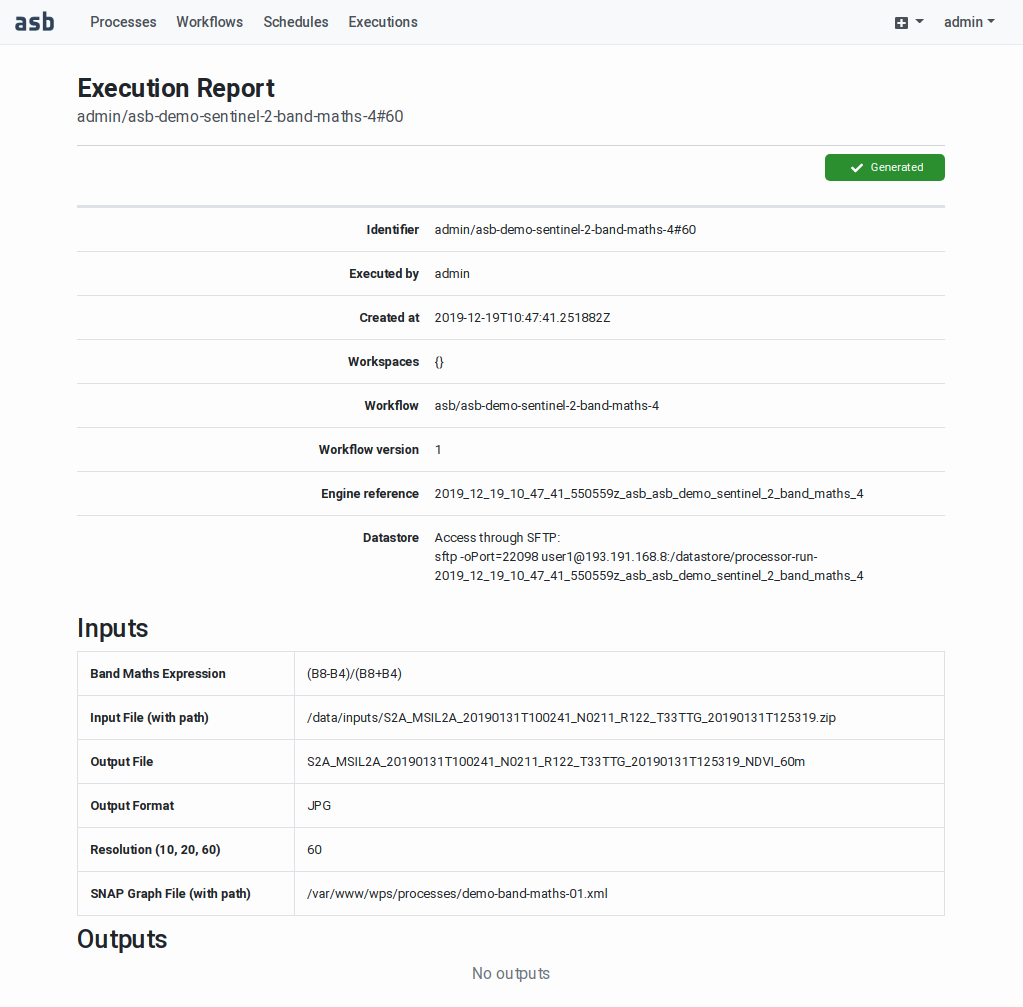

Monitoring the workflow execution and accessing the results Workflow executions are displayed

in two pages: the Recent

Executions page and the Execution

History page. The first page

displays the recent (started in

the last 24 hours) and the

on‑going executions. The second

page displays all the completed

executions without age limit. See

After the successful execution of

a workflow, an Execution Report

becomes available. This includes

the execution metadata, the

user-specified input parameters

(bounding box, time range, scene

identifiers, and other specific

input values), the list of

outputs and generated product

files, and if available

quicklooks of the output product.

The page also includes the

graphical representation of the

workflow graph and a Gantt chart

showing the times at which each

task in the workflow has been

executed. See

|

The EOPEN Developer Portal¶

This chapter describes the user interface of the EOPEN Platform for the application developers. Next section gives an overview of the available pages and the principal navigation links. The remaining of the chapter describes the pages, the operations and the related Platform behaviour.

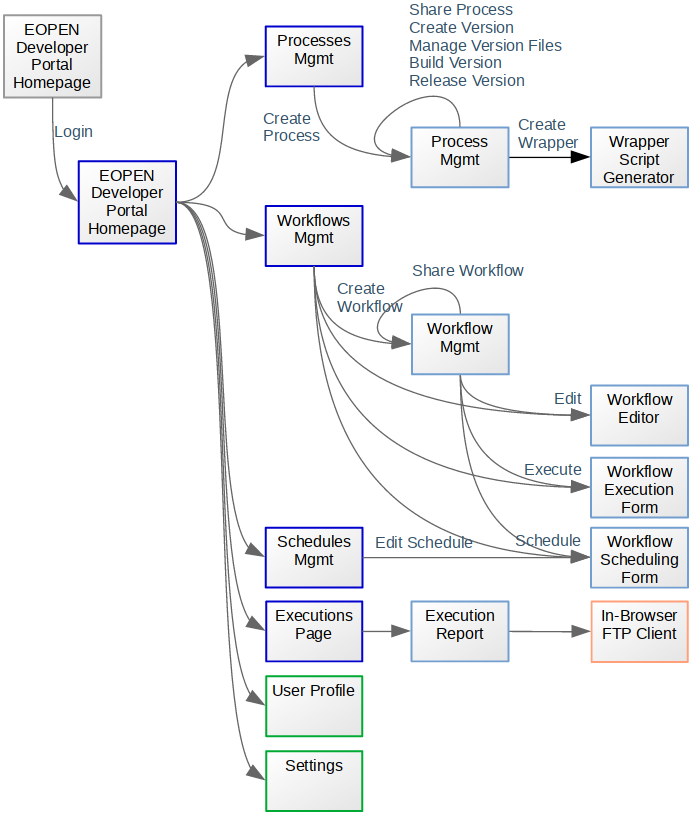

Site Map¶

Figure 33, below, shows the structure of the Platform user interface. Each box represents a Web page and the arrows show the navigation between the pages. Not all navigation links are represented, however. For example, logging out on any of these pages redirects the user to the EOPEN Developer Portal Home Page (grey box on the left).

Figure 33 EOPEN Developer Portal Site Map¶

Home Page¶

The EOPEN Developer Portal Home Page (https://eopen.spaceapplications.com) is the only page accessible to non‑authenticated users. The page body informs about the EOPEN project and the Automated Service Builder (ASB) framework on which the EOPEN Platform is built. It shows in its banner a link for logging‑in.

Figure 34 Home Page for Non‑Authenticated Users¶

Click on the Log in link to open the User Authentication Form.

User Authentication¶

The User Authentication Form is displayed when the Log in link located in the page banner is clicked. The form lets you enter your name and password. The Go back to the home page link allows aborting the login process and navigating back to the EOPEN Developer Portal Home Page.

Figure 35 User Authentication Form¶

After a successful authentication, the browser is redirected to the EOPEN User Portal. Click on the Developer Portal button to navigate back to the Developer Portal.

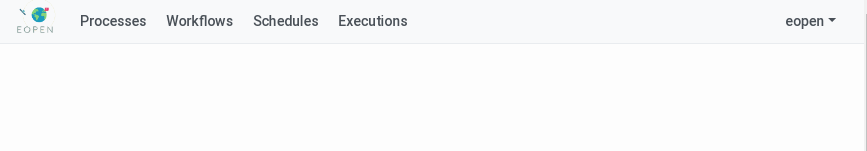

Header Panel¶

Once authenticated, the top part of the Web pages is extended with links and menus that give access to the different features of the platform:

Figure 36 Header Panel¶

The header panel provides the following elements:

Label |

Target |

Section |

The EOPEN icon |

EOPEN Developer Portal home page |

|

Processes |

Processes Management pages |

|

Workflows |

Workflows Management pages |

|

Schedules |

Schedules Management pages |

|

Executions |

Executions Inspection pages |

|

<user-name> |

User profile and settings menu |

|

User Configurations¶

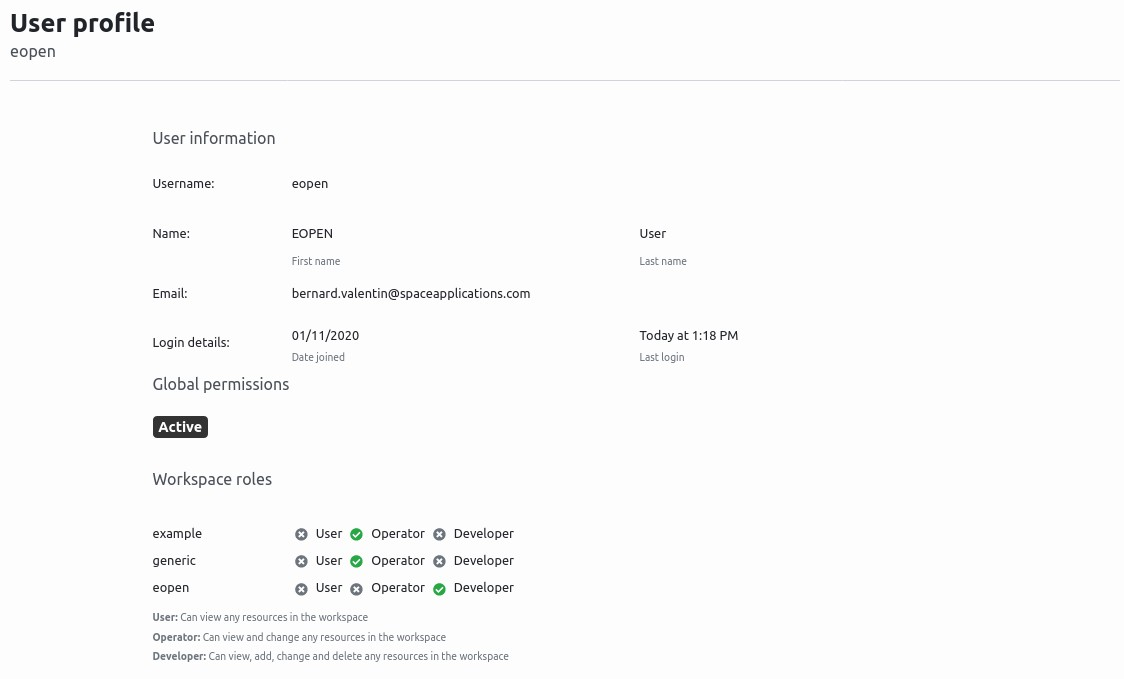

User Profile¶

The user profile page, shown in Figure 37, shows the user information as username, name, email, login details, permissions and roles.

Figure 37 User Profile Page¶

User Settings¶

User settings provide information on the platform and some configuration to the user. The settings page can be accessed by clicking on the username in the Header Panel, as shown in Figure 38.

Figure 38 Settings Dropdown Link¶

The settings page, shown in Figure 39, provides the name of the platform, the version and user settings.

Figure 39 Settings Page¶

Processes Management¶

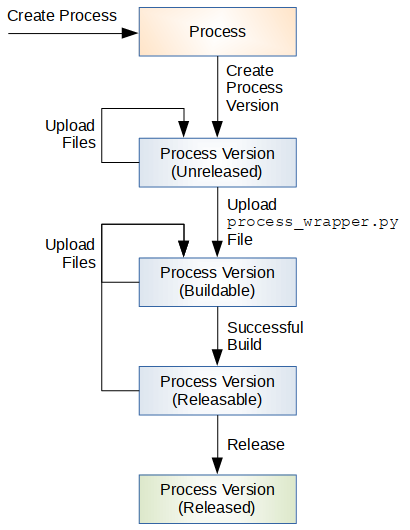

Process Development Lifecycle¶

A Process, and more specifically a Process Version, is a single unit of execution. Custom processes and versions may be added in the platform by the developers by executing the following steps, depicted on Figure 40:

Create a process in the platform (section

Creation of a New Process).This is an initialisation step required to declare the process and start working on it.

Create a new process version (section

Creation of a new Process Version).Initially, a process version has no implementation files. These must be uploaded in a following step. Concretely, it means the process version may not be built and released yet. Its status is “Unreleased”.

Generate and prepare a process wrapper script (section

Generation of a Process Wrapper Script).A process wrapper script acts as an adapter between the service interface that will allow executing the process remotely and the process implementation files. This step is not represented in Figure 40.

Upload the wrapper script and the process implementation files (section

Upload of the Process Version Implementation Files).This is an interactive step. Files can be added and overwritten until all the files required to implement the process have been uploaded. As long as no valid wrapper script (whose name must be process_wrapper.py) has been uploaded the status of the process version remains “Unreleased”. As soon as a valid wrapper script has been uploaded, the status changes to “Buildable”.

Note: Importing and testing processes in the EOPEN platform is not as straightforward as executing a Python script offline, on a local system. It is thus advised, in order to same time, to test new algorithms locally before importing them in the platform. Ideally the test should involve the wrapper script as well. This is further described in

Testing Processes Off‑line.Build the process version (section

Building the Process Version).At this step, the process version implementation files are packaged and tested and a dry‑run of import is performed. This step is important to determine if the uploaded files can be compiled and loaded to start serving a processing service.

If the build is not successful, new implementation files may be added or replaced before trying a new build.

If the build is successful, the status of the process version becomes “Releasable”. At this point, it is still possible to add or replace the implementation files.

Release the process version (section

Releasing a Process Version).At this step, a build is performed again and the resulting package is registered in the platform as an executable process. From this point, the status of the process version becomes “Release” and no changes may be applied to the implementation files anymore. The process version may be selected and integrated in Workflows (see Workflows Management in

Workflows Management).

Figure 40 Process Development Lifecycle¶

At any time a process may be shared (with all its existing and future

versions) with other platform users by adding it to one or more

workspaces. Instructions to do so are provided in

Process Sharing.

The following sections further describe each of the process management tasks.

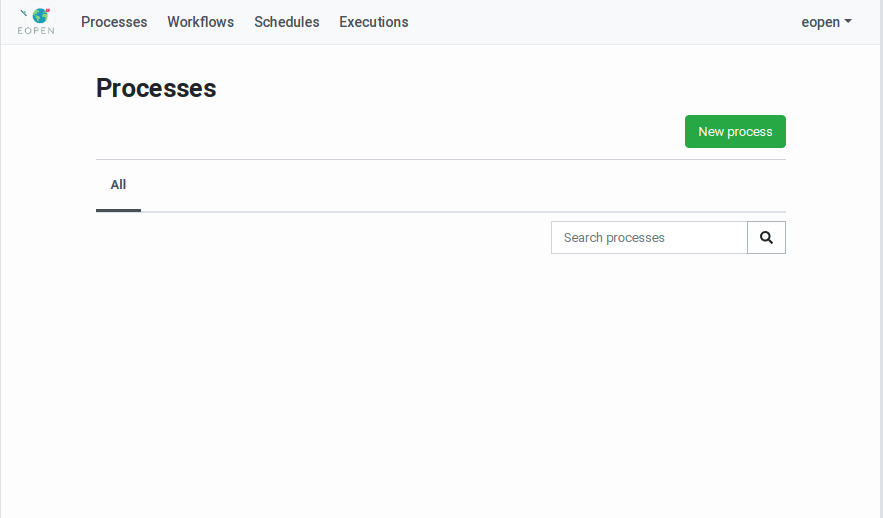

Processes Management Page¶

The Processes Management Page, titled Processes, gives access to the tools for creating, configuring and sharing processes.

As can be seen on Figure 41, the page contains the following elements:

A table of existing processes (accessible by the authenticated user).

Each process is prefixed with the name of its owner that is the user who has created it. Different user names may be present in the list as processes can be shared among users (see

Process Sharing).A field for applying a textual filter on the table

A New process button for creating a new process

Click on a process name to navigate to the Process Management Page as described in the next section.

Figure 41 Processes Management Page¶

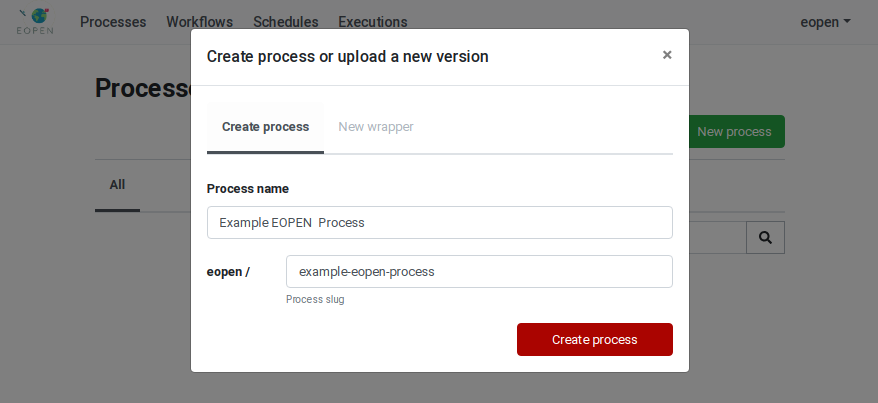

Creation of a New Process¶

Clicking on the New process button reveals a popup dialog box which asks for the name of the new process (see Figure 42). The process name is a free text that will be used to identify the process in the user interface. At the same time the name is entered, a “slugified” version of it is generated automatically and displayed in a second input field. It is in principle not necessary to edit this generated identifier but should it be necessary, care must be taken to enter an identifier that only contains lowercase characters, dashes and digits.

Figure 42 Creation of a new Process¶

Enter valid values then click on the Create process button to create the process. You are automatically redirected to the Process Management Page:

Figure 43 Process Management Page¶

On the screen, the process name is always prefixed with the name of its owner.

The Process Management Page also contains the following elements:

A Share this process button for configuring in which workspaces the process should be accessible (see

Process Sharing).- A New wrapper button for generating a process wrapper script (see

Generation of a Process Wrapper Script).

- A New version button for creating a new version of the process (see

Creation of a new Process Version).

The currently associated workspaces (not displayed if the process is not shared)

A drop‑down list with the existing process versions. Selecting a version in the list reveals information about the version as well as buttons for managing that version, as will be explained in the next section.

Creation of a new Process Version¶

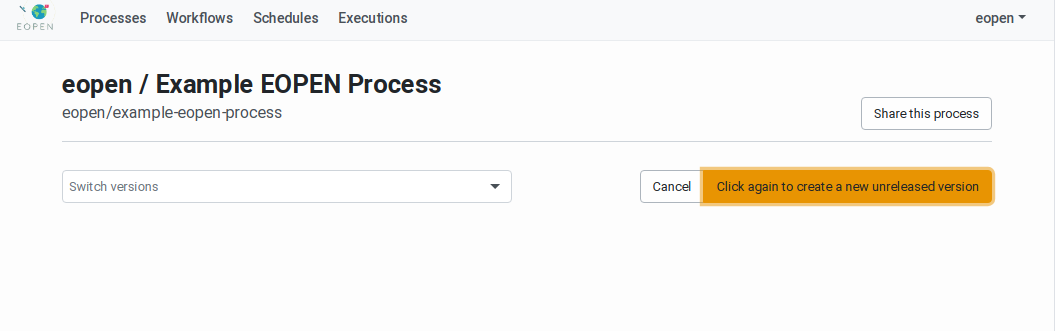

In order to create a new process version, navigate to the related Process Management Page. The page includes the list of existing Process Versions in a drop‑down list and a New version button. Click on this button once reveals a Click again … button. Click on it to confirm and start working on a new process version.

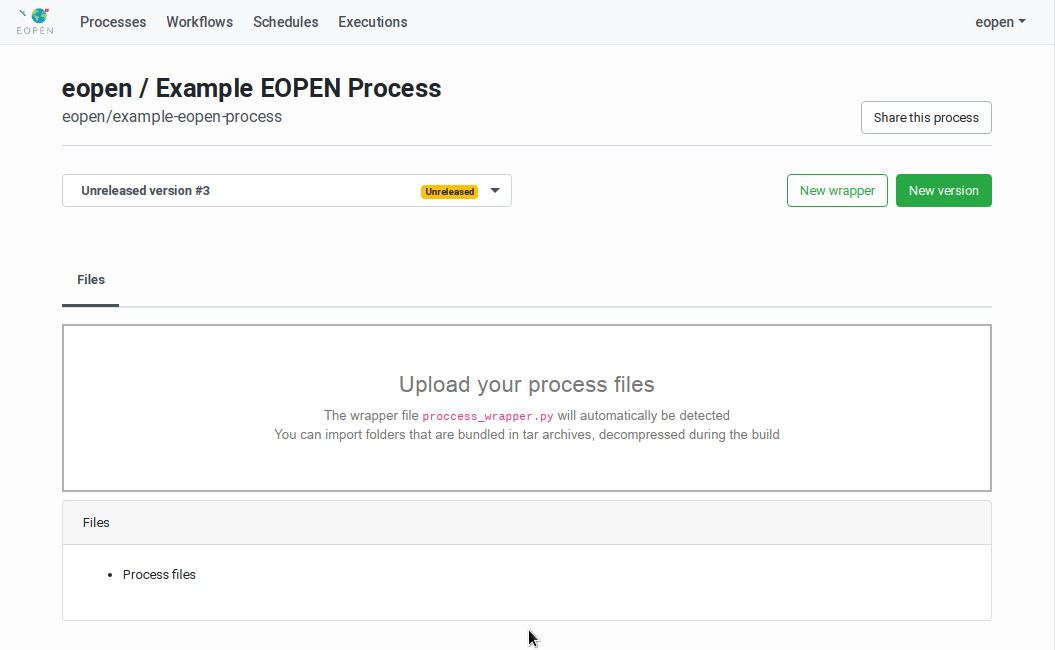

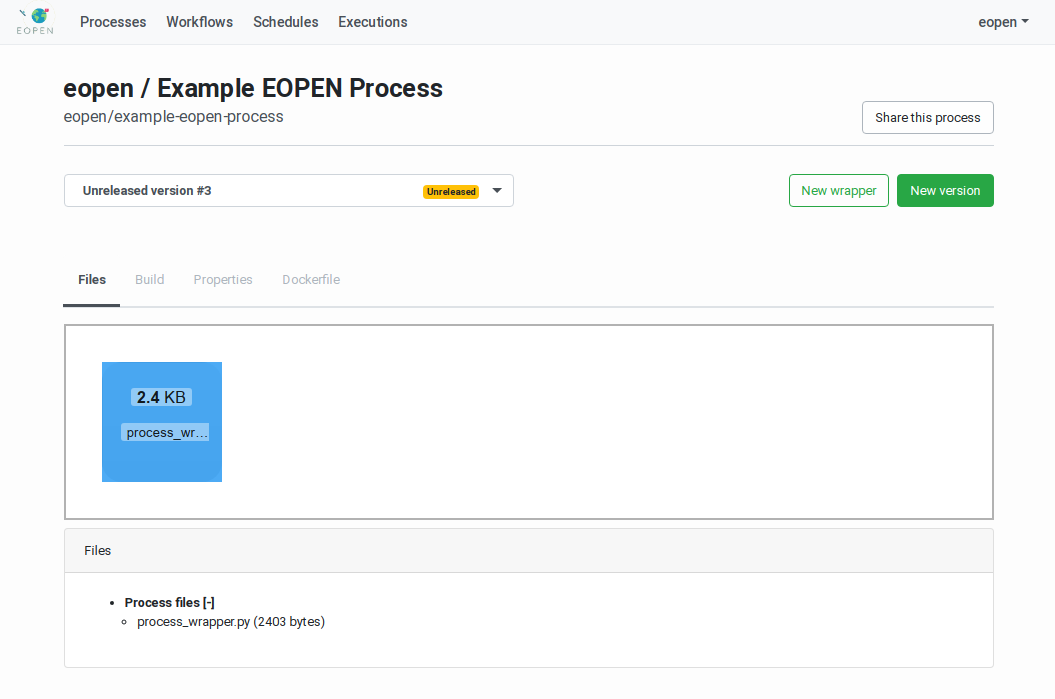

As shown on Figure 45, the process version has the

status “Unreleased”. The page now shows an area for uploading the

process version implementation files. Its usage is described in

Upload of the Process Version Implementation Files.

Figure 44 Process Management Page with New Version Confirmation Button¶

Figure 45 Process Management Page with Selected Version¶

Generation of a Process Wrapper Script¶

For each process version to be imported in the platform, it is necessary to generate a corresponding process wrapper script. This script acts as an adapter between the platform and the actual process implementation.

The creation of a wrapper script is facilitated by the Process Wrapper Script Generation page which may be accessed by selecting a particular process version and clicking on the New wrapper button.

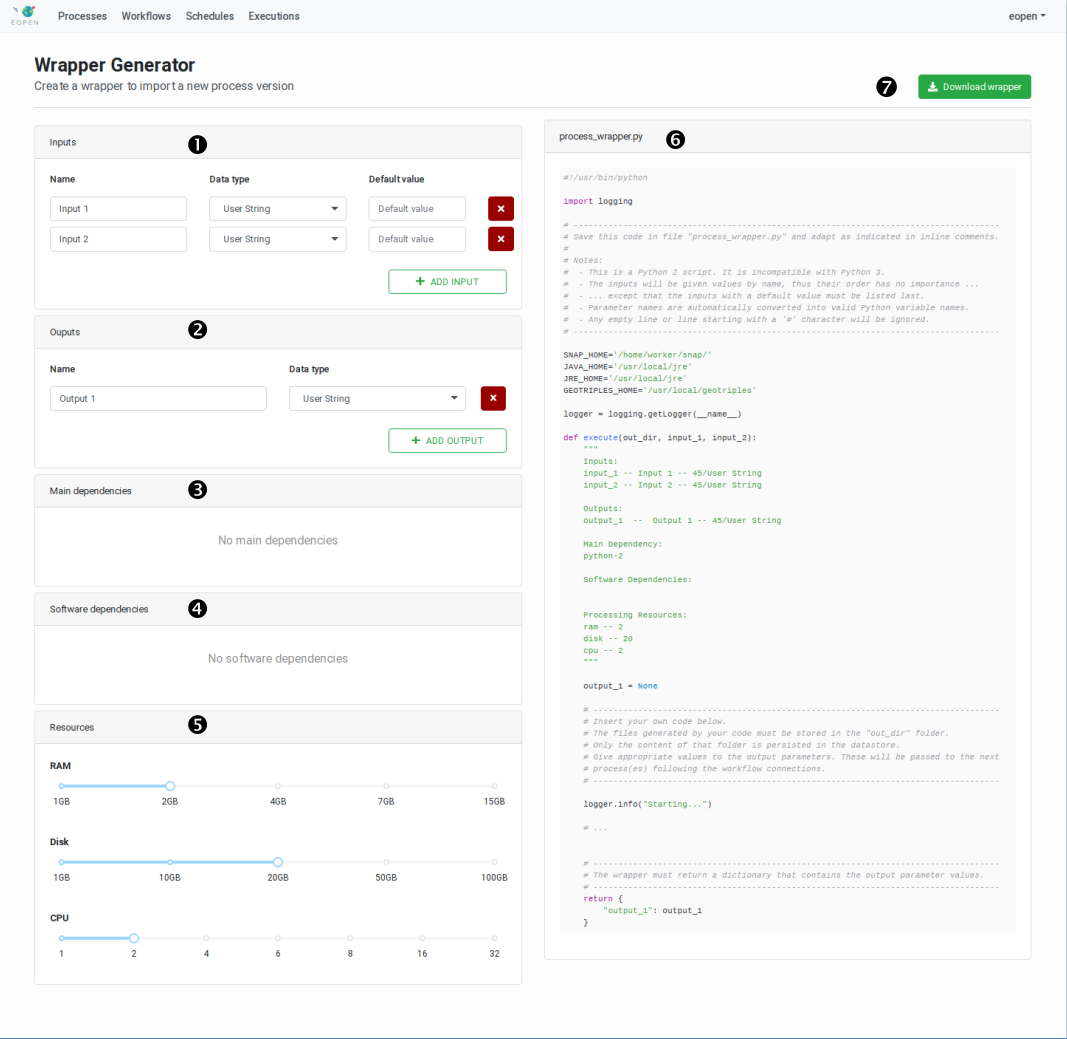

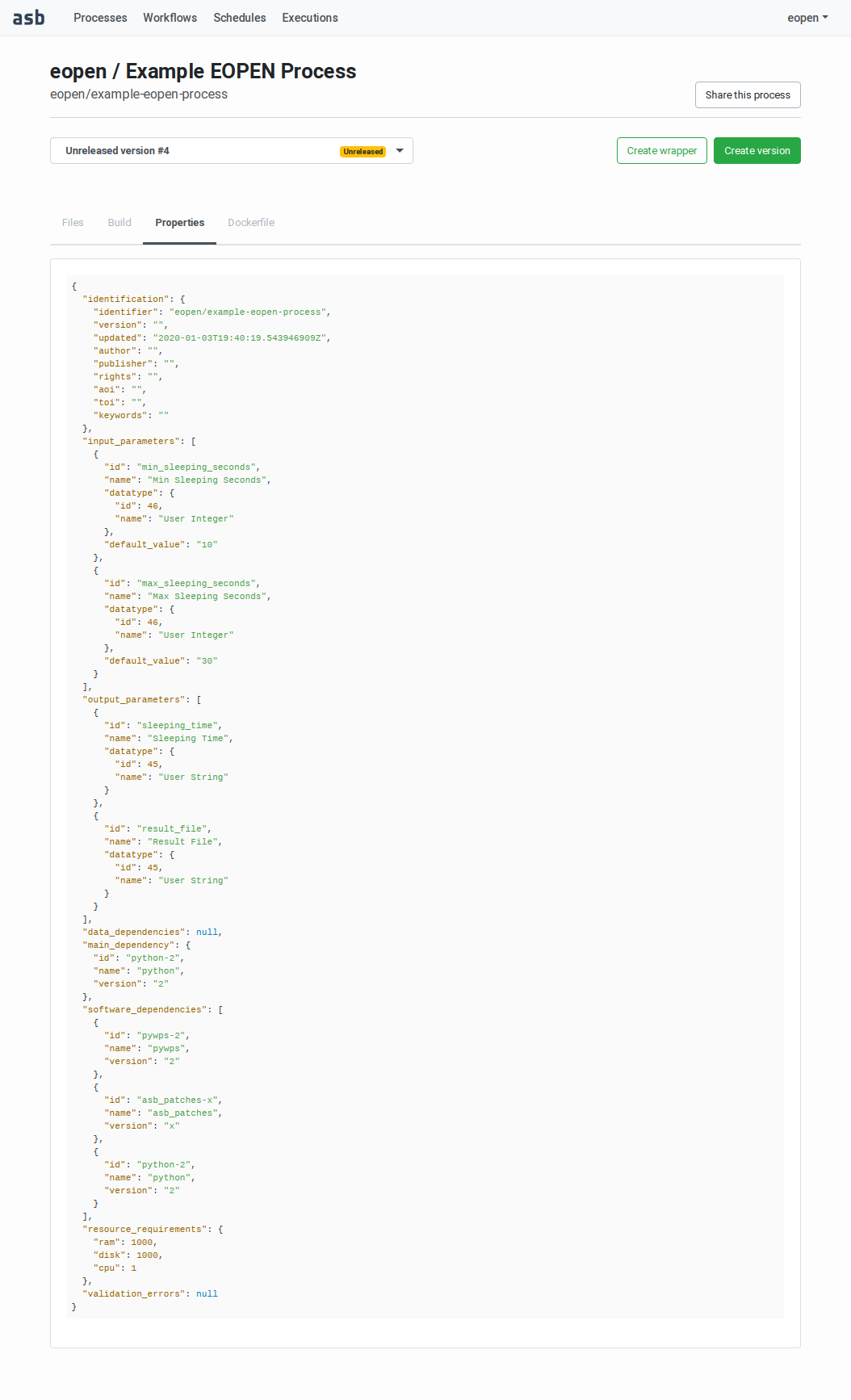

The page, represented on Figure 46, allows generating a preliminary version of the script. This must be downloaded and edited locally to include the call to the process algorithm (or to include the algorithm itself). The input parameters received by the wrapper function must be passed to the algorithm and the algorithm outputs must be returned by the wrapper function. Passing the input and output parameters must be performed in the appropriate manner, that is, according to the rules of the Python language.

The Process Wrapper Script Generation page is divided in two parts:

The left panel is occupied by a form that allows configuring the process. In particular, it allows declaring the list of input and output parameters, the software dependencies and the hardware dependencies.

The right panel contains the generated wrapper script. This is dynamically updated each time a modification is applied in the form on the left. For example, when a new input parameter is added in the form, this is inserted as an input to the execute functions, and when a new output parameter is added, a corresponding variable is created and initialised in the function, and included in the returned dictionary.

Figure 46 Process Wrapper Script Generation Page¶

The Process Wrapper Script Generator page displays the following elements:

1 |

Definition of the input parameters. Click on the ADD INPUT button to declare a new input parameter. Give a name and optionally a default value to each input in the list and associate them to a data type. |

2 |

Definition of the output parameters. Click on the ADD OUTPUT button to declare a new output parameter. Give a name to each output in the list and associate them to a data type. |

3 |

Select in this panel the main software product required to run the algorithm. For example, select “R Interpreter and Libraries” if at least part of the algorithm is implemented in the R scripting language, or select “SNAP + Sentinel Toolbox v7.0” if the algorithm makes use of the SNAP command line tools (including GPT and Snappy). |

4 |

Select here the additional software packages your algorithm depends on. Python is installed by default in all process Docker images. Several entries may be selected but, for example, it doesn’t really make sense to install more than one version of the Java runtime environment. The selected packages will be installed in the process Docker image at import time. The amount of selected dependencies thus impacts the time required to build these images. |

5 |

Indicate here the minimum amount of hardware resources required to run the process. The process will not be deployed and executed in a node that has less than the required resources available. If more resources than required are available (e.g. 4 CPUs required but 8 available), the process will have the possibility to use them. |

6 |

The right half of the page shows the dynamically generated process wrapper template. This is updated automatically when new values are entered or selected in the form on the left. |

7 |

The Download wrapper button triggers a download of the process wrapper template. This is named “process_wrapper.py” by default. Depending on the Web browser configuration, the download happens automatically or the target location is asked at first. |

Enter the necessary information in the form then click on Download wrapper to save the generated process wrapper template next to your algorithm files (if any).

Important: The name of the file (process_wrapper.py) must be preserved otherwise it will not be recognized as a wrapper script at import time.

Edit the “process_wrapper.py” file and add within the “execute” function the Python 3 code that takes the function input values, run your algorithm, and returns the execution results. If the code of the algorithm is short enough, it may be directly integrated within the “execute” function.

When this is done, the algorithm files and the “process_wrapper.py” file

should be tested locally (see Testing Processes Off‑line) then

uploaded in the platform. These operations are described in the next

sections.

Upload of the Process Version Implementation Files¶

When a process version is selected in the Process Management Page the page displays a panel titled “Files” (see Figure 45). The panel contains a “drop‑off” zone that allows to drag and drop the process version implementation files directly on the page. Alternatively, clicking on the zone opens a dialog box that allows navigating and selecting the files to upload.

Each time one or more files are dropped or selected for upload, these are sent to the server where they are analysed. If one of these files is named process_wrapper.py and contains a process wrapper script, this is parsed and the process properties extracted.

Note 1: Several files, organised in a folder hierarchy may be uploaded as

well. Instructions to do so are provided in Importing a Folder Hierarchy.

Note 2: Additional Python packages may be installed automatically in

the Docker image by uploading with the process version implementation files

a “requirements.txt” file that will be provided to the standard “pip” tool.

This is further described in section Installing additional Python Packages.

When a valid process_wrapper.py file is uploaded, possibly with additional implementation files, new tabs become available:

Build: Use this tab to package the files and release the process version in the platform as explained in

Building the Process VersionandReleasing a Process Version. (Figure 48).Properties: Use this tab to visualise the process version metadata (encoded in JSON) as extracted from the process_wrapper.py file (Figure 49).

Figure 47 Process Version showing the uploaded files¶

Figure 48 Process Version showing the Build panel¶

Figure 49 Process Version showing the process properties¶

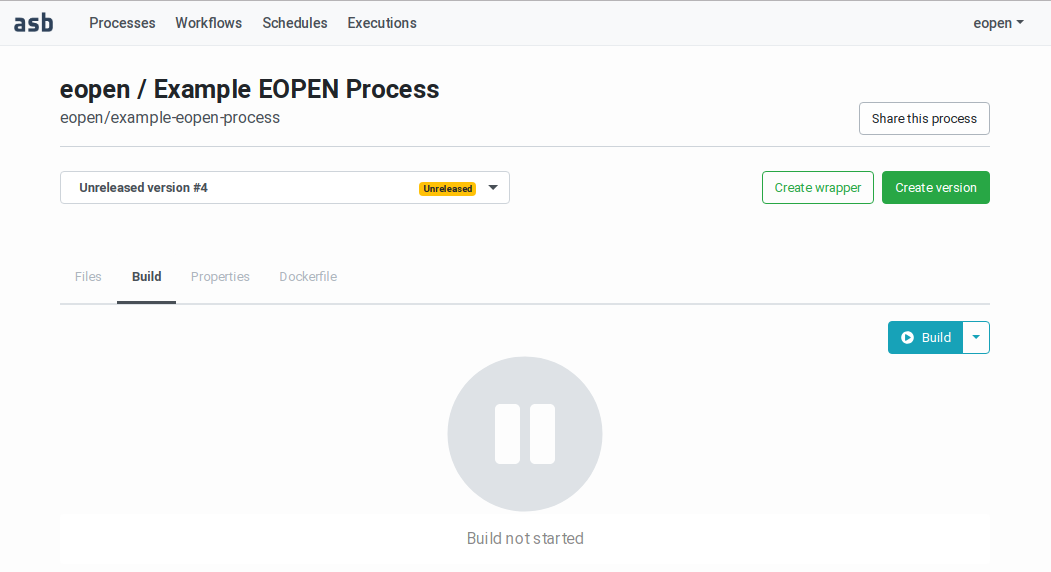

Building the Process Version¶

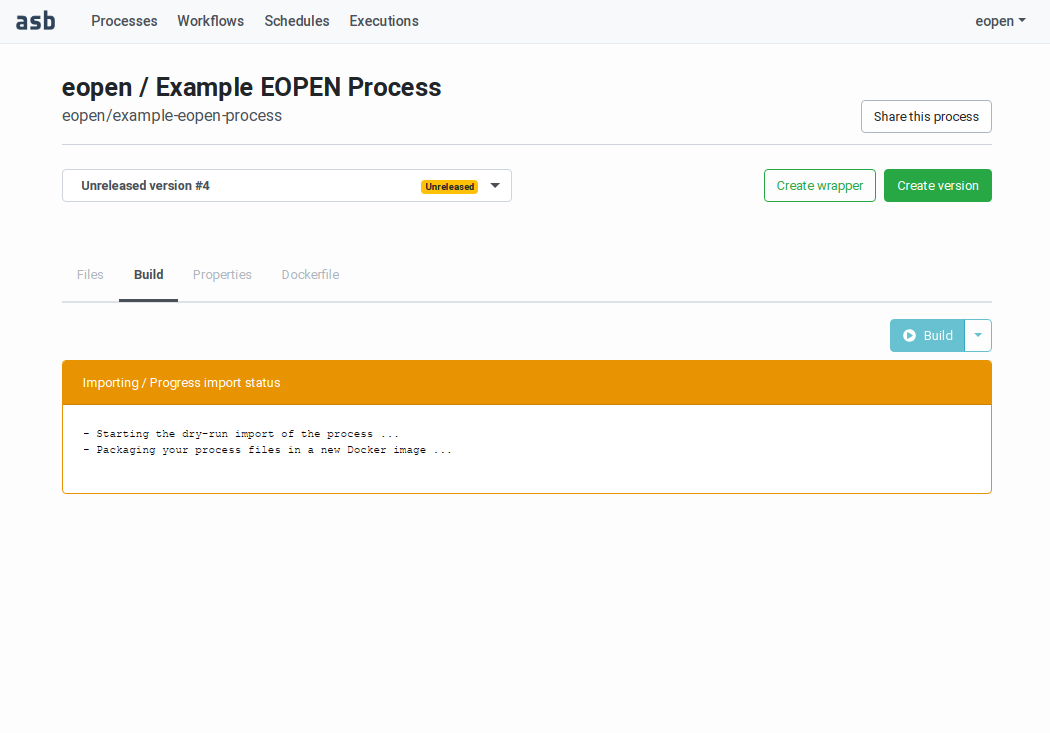

To build a new process version, navigate to the related Process Management page, select the version to build in the drop‑down list then click on the “Build” tab. The build panel is then displayed as shown on Figure 48.

Note that this tab is only accessible if a valid process wrapper script has been uploaded, as explained above.

The “Build” tab contains a Build button. Clicking on it starts a build and a release dry‑run. As can be seen on Figure 50, the page displays messages that inform about the progress of the dry‑run steps. The messages are pushed from the server to the browser and thus appear automatically in real‑time.

The build operations may take some time, varying with the amount of software dependencies selected in the Process Wrapper Script Generation page. Each additional software dependency represents one or more installation operations to be added in the build script of the target Docker image.

Figure 50 Building a Process Version (on‑going build)¶

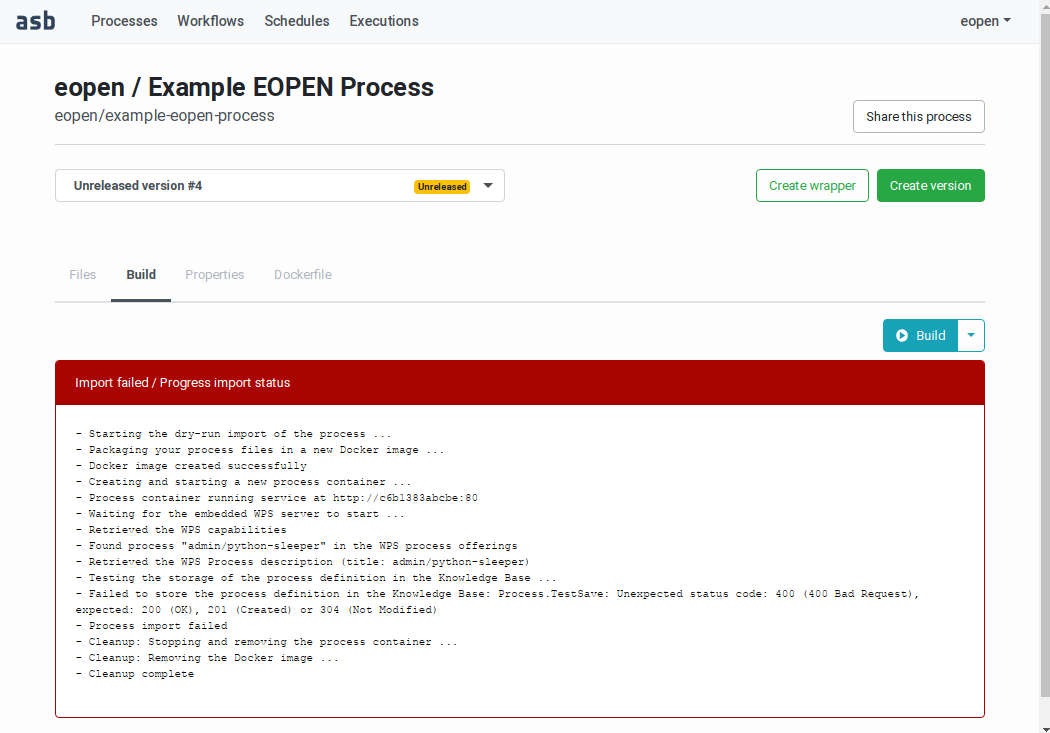

If the build or the release dry‑run fails, the panel turns red and a description of the error is displayed, as shown on Figure 51:

Figure 51 Building a Process Version (failed build)¶

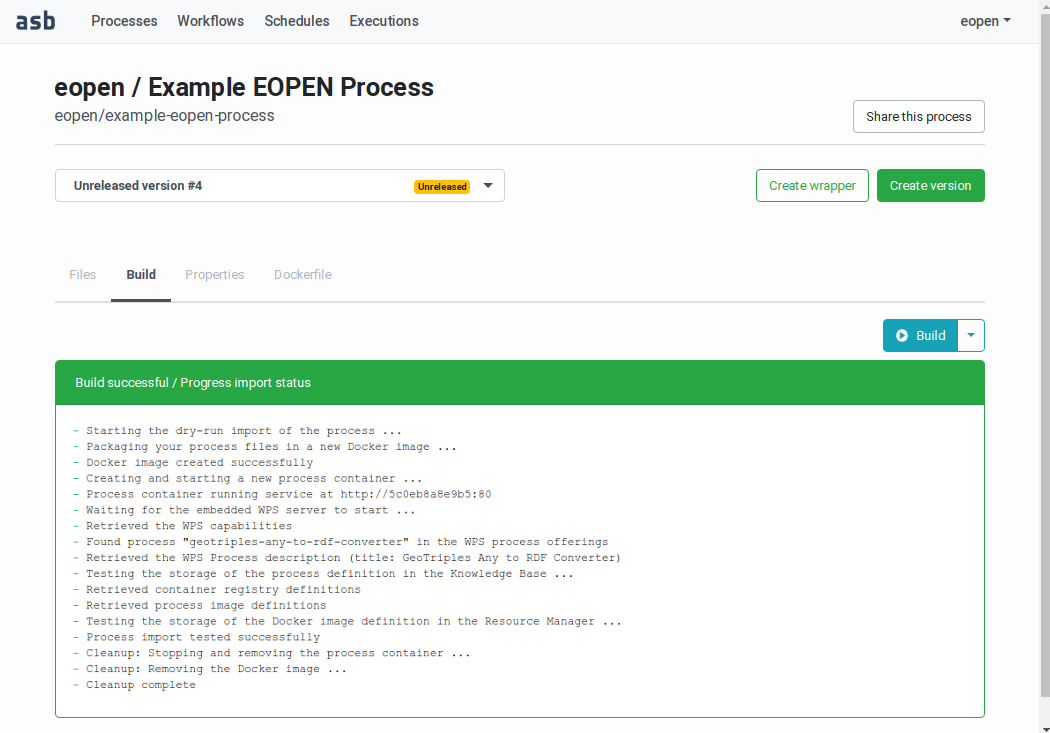

If the dry‑run import is successful, a green panel is displayed, as shown on Figure 52:

Figure 52 Building a Process Version (successful build)¶

Releasing a Process Version¶

A process version with a valid wrapper script and which successfully passed the build and release dry‑run is “Releasable”. At this time, it is still possible to upload new implementation files (or new versions of existing implementation files). Doing so “downgrades” the process version status back to “Buildable” as a new dry‑run is necessary to ensure the process version and its implementation files are valid.

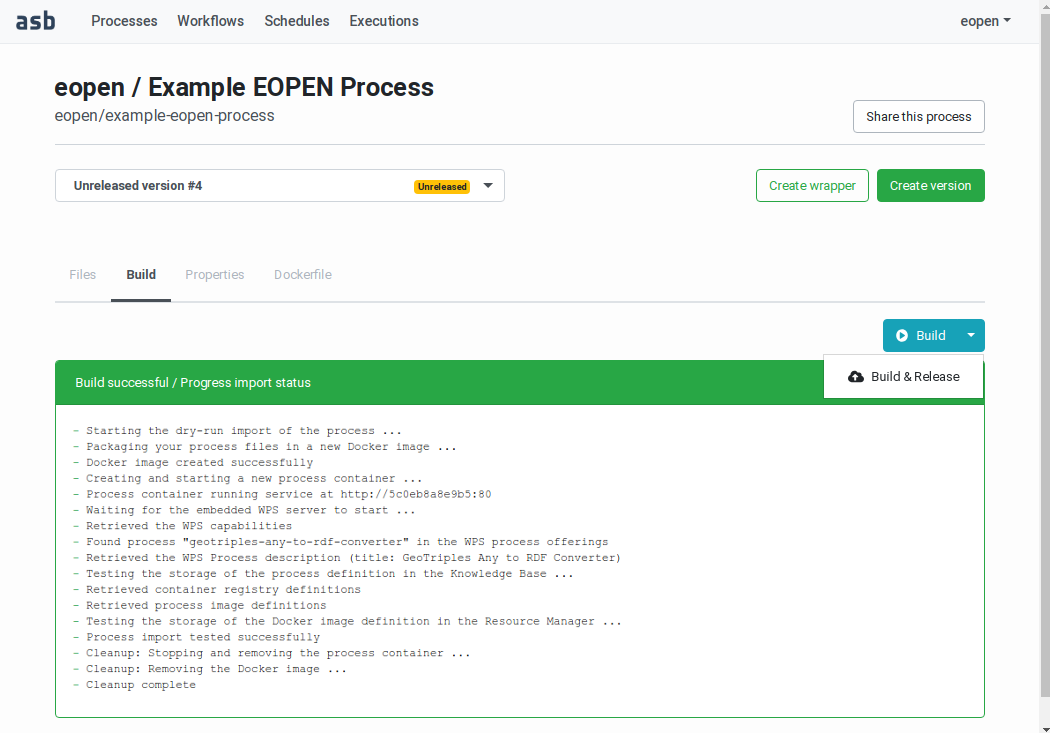

To release a new process version, navigate to the related Process Management page, select the version to release in the drop‑down list then click on the “Build” tab.

The “Build” tab contains a Build button and an associated menu, as shown on Figure 53. Expand the menu and select the Build & Release entry. A build is started again and if successful the process version is imported and registered in the platform. Its status becomes “Released” and from now on it is not possible anymore to modify its implementation files.

Figure 53 Releasing a Process Version¶

Once release, a process version may be selected and integrated in

processing Workflows, as described in Workflow Editing, below.

Process Sharing¶

By default, a Process is only visible and usable by its owner, that is, the user who created it.

In order to share a Process with other platform users, this must be added in a workspace accessible by these users (that is, a workspace in which they have a role).

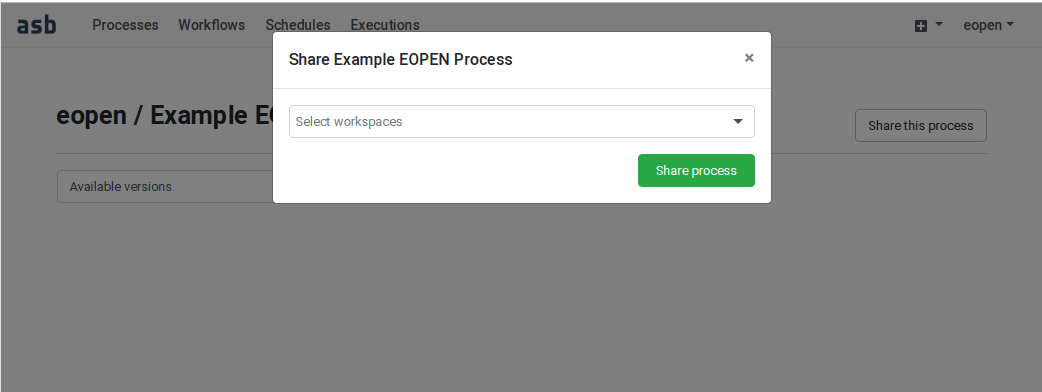

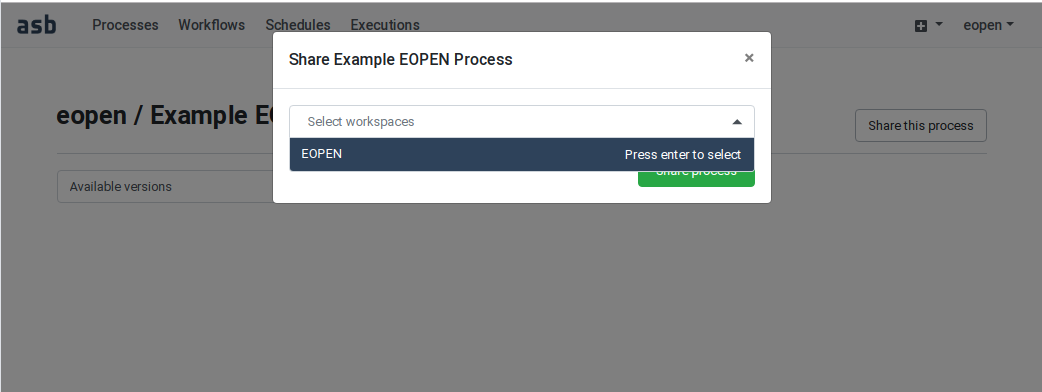

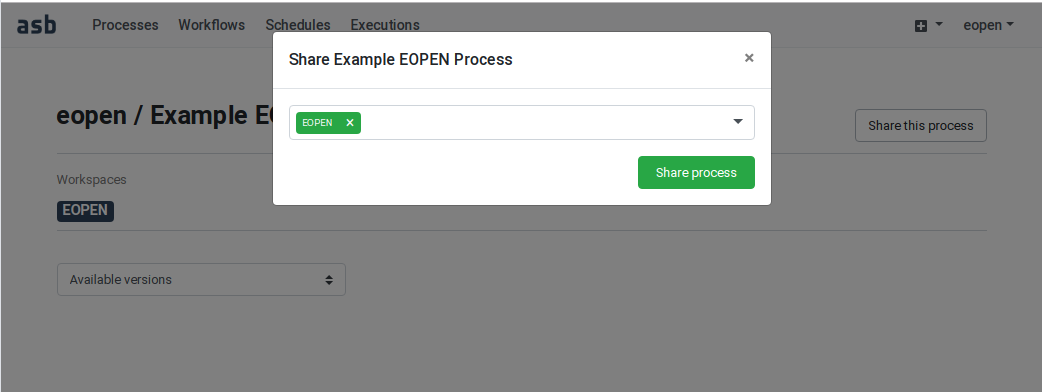

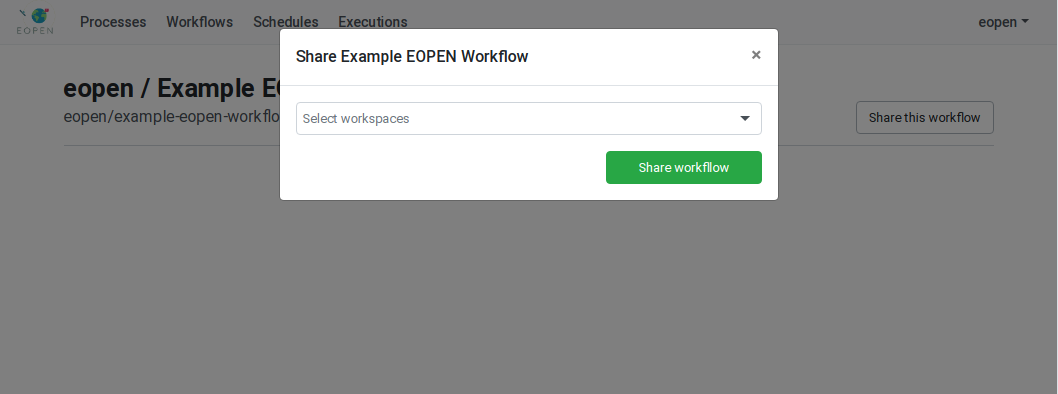

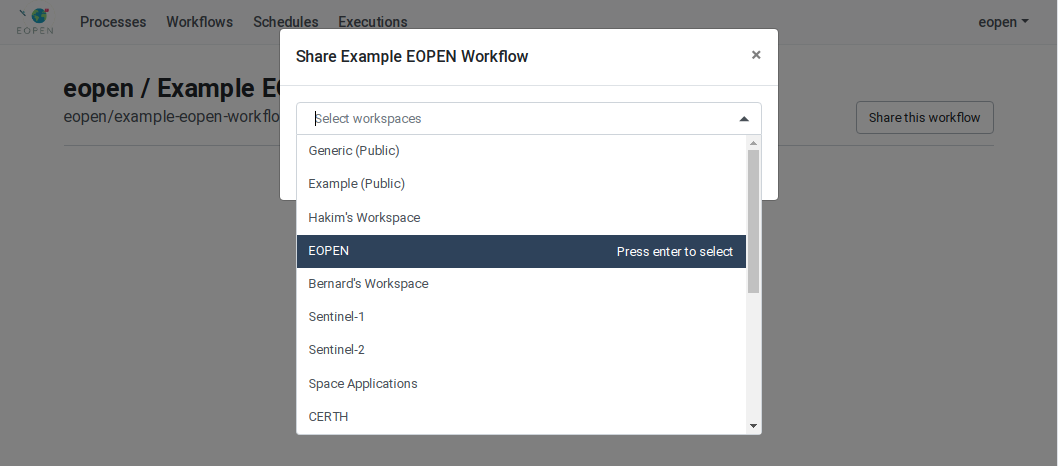

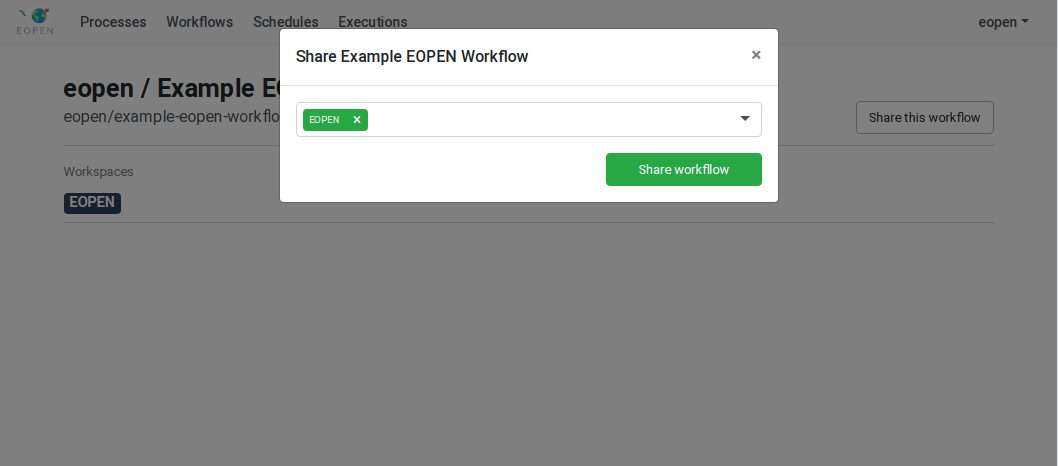

In the following example, user “eopen” wants to share his Process “Example EOPEN Process” with the users who are given access to workspace “EOPEN”.

As can be seen on Figure 54, the process is not yet assigned to any workspace. To do this, click on the Share the process button. A popup dialog box appears. This allows selecting the workspace(s) the process must be assigned to. Workspaces must be selected in a drop‑down list. Once selected, a workspace may be removed by clicking on its “X” icon. The sequence of operations is depicted in Figure 55.

Figure 54 Process Management Page¶

Figure 55 Process Sharing Operations¶

Figure 56 Process Sharing Operations¶

Figure 57 Process Sharing Operations¶

Process Implementation Guidelines¶

The following sections provide hints and guidelines for implementing processes. The tool for building new process versions is not currently verbose in describing what is causing an error during the actual import operations. The guidelines also helps indicating what could have been wrong in case of error.

Testing Processes Off‑line¶

The import mechanism (during the dry‑run and the actual import) includes the generation of a Docker image, the instantiation and execution of an instance of it (as a container), and the verification that the imported process is present in the offerings on the embedded OGC WPS service. This requires the process_wrapper.py file to be imported and parsed by the Python interpreter. If the Process Import Tool complains that the process cannot be found, the most probable cause is that the process wrapper file could not be imported. This may be due to a syntax issue (such as invalid indentation), or the import of a missing library or module.

It is thus advised, in order to prevent most of the errors that may occur at import time, to at first try to import and execute (if the dependencies are available), the wrapper code locally, in a controlled environment.

Example of local execution:

Python 3.7.7 (default, Apr 23 2020, 14:43:26)

[GCC 8.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from process_wrapper import execute

>>> execute('/tmp', 'ignored')

{'events': {u'events': []}}

All Input Parameters are Strings¶

The Process Import Tool requires each input and output parameter to be associated to a data type. This data type is used by the platform to verify whether the inter‑connected parameters in the workflows are compatible or not. For example, the Workflow Editor will allow connecting two parameters of the same type (object, string, date, etc.) or an output of type string with an input of type object (as a string is an object), but not an output of type object with an input of type string (as an object is not necessarily a string).

In case of doubt, selecting “User String” is a safe default choice.

The data type of the process parameters is however not used in the processes themselves. Concretely, all the inputs to the execute(…) function in the process wrapper module are passed as strings.

It is up to the developer to convert (in a safe manner) the input values to their expected datatype.

# Testing the type of a variable

value_type = type(any_value)

# Convert string to integer

int_value = int(string_value)

# Convert string value to float

float_value = float(string_value)

# Convert any Python structure (including arrays and dictionaries)

# Note: this is potentially dangerous at string_value is interpreted

parsed_value = eval(string_value)

Importing Python Libraries¶

The process wrapper module may require additional libraries and modules to be imported depending on the processing needs. As the Python language permits it, the import statements may be added either in the main context (i.e. at the top of the file according to the official recommendations), or within code blocs.

Importing at the top of the file permits to verify that the imported element (library, module, function, etc.) is indeed available within the Docker image. If the import statement is located, for example, within the execute(…) function, this will not be evaluated at process import time but when the process will be deployed and executed during a workflow execution. In this case potentially missing dependencies will be detected at execution time instead of process import time.

Importing and Using GDAL Libraries¶

Note about GDAL libraries: in the recent versions of the GDAL Python bindings, direct import of the GDAL libraries has been removed. This is shortly explained here: https://pypi.org/project/GDAL/. As of writing this guide, GDAL version 2.4.0 is installed when the dependency is selected in the Process Import Tool.

The following code fragment shows the proper way to import GDAL libraries that is through the osgeo package:

# Before:

#import gdal

#from gdalconst import *

#from osgeo import osr

# Now:

from osgeo import gdal

from osgeo.gdalconst import *

from osgeo import osr

Using SNAP 7 Tools GPT and Snappy¶

In this second EOPEN platform prototype the version of the Sentinel Application Platform SNAP (https://step.esa.int/main/toolboxes/snap/) has been upgraded from version 6 to version 7. This release uses a new version of the Java virtual machine that has better compliancy with the containerisation technology.

The version of SNAP available at the time this document has been produced is 7.0.0 (22.07.2019 13:30 UTC) originating from: https://step.esa.int/main/download/snap-download/

To use SNAP 7 in a process, select SNAP Sentinel‑1/2/3 Toolbox v7.0 + Snappy as Main Dependency in the Process Wrapper Script Generation page. This translates into the following entry in the wrapper script:

Main Dependency:

snap_sen_tbx-7.0

The use of GPT and Snappy in SNAP version 7 remains unchanged compared

to version 6.0. An example process wrapper script that uses GPT is

provided in Appendix %s.

Use GPU Capabilities¶

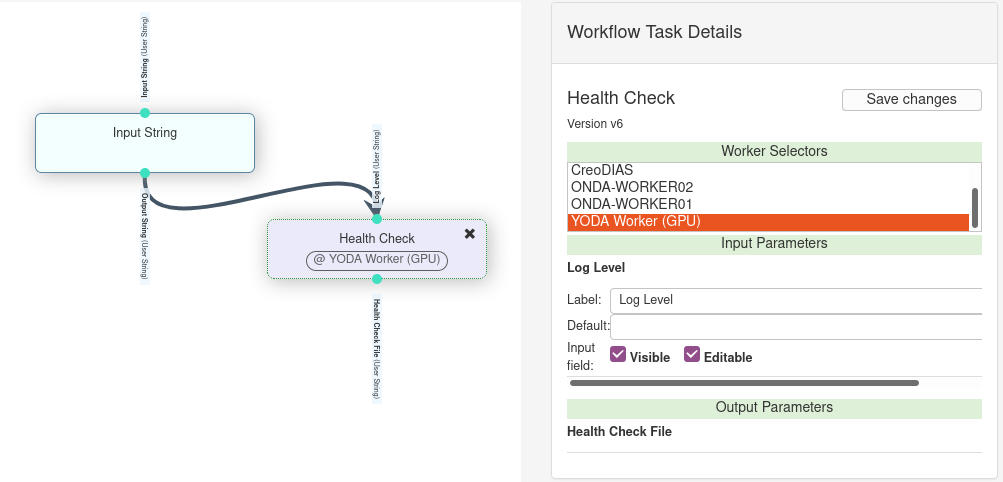

To run a process that beneficiates from GPU hardware, you need to do the following:

Release your process version by indicating the number of GPUs required for your algorithm

At runtime tag your workflow processes with a gpu-enabled worker

Release your process version with GPU enabled

In the wrapper generator or directly in your wrapper script include the following line under Processing Resources:

gpu -- 1

This indicates that your process version requires 1 GPU. Note that GPU are integer resources, this means that you should never put a floating point for GPU resources. If a floating value is provided, the wrapper parsing will fail.

Flag your workflow processes

In the workflow tag the processes, as described in Worker Selectors,

that require GPU with a gpu-enabled worker.

For example use the YODA Worker (GPU) selector to run your processes on SpaceApps’ GPU-enabled worker.

Using the Local Folder¶

The files that are imported via the Process Import Tool are located in folder /asb/user in the Docker images. This is not the working folder of the Python interpreter that runs the OGC WPS service which means trying to access a local file from a Python script without providing at least a path fragment will not work.

For example, consider a file settings.json uploaded together with process_wrapper.py and loaded by the latter. The following code will fail because the JSON file cannot be found:

# Read process settings from JSON file

with open('settings.json') as settings_file:

data = json.load(settings_file)

The following error is raised at execution time:

IOError: [Errno 2] No such file or directory: 'settings.json'

The absolute path to the file must be included, as follows:

# Read process settings from JSON file

with open('/asb/user/settings.json') as settings_file:

data = json.load(settings_file)

Using the out_dir Folder¶

The execute(…) function defined in each process wrapper receives an out_dir parameter. For example:

def execute(out_dir, startdate, enddate, writelogfile='False'):

pass

This parameter is given the absolute path to the folder whose content is persisted after the completion of the process. Any file or folder located outside this folder will be lost.

out_dir must be used to save the files that are needed by the processes connected downstream in the workflow. In that case, the process that stores a file must output its path (and file name as appropriate) and pass this value to the next processes through connected parameters.

Please note that the path is edited automatically and is not the same inside and outside the processes execution environment. It is thus important to pass them through output/input parameter connections.

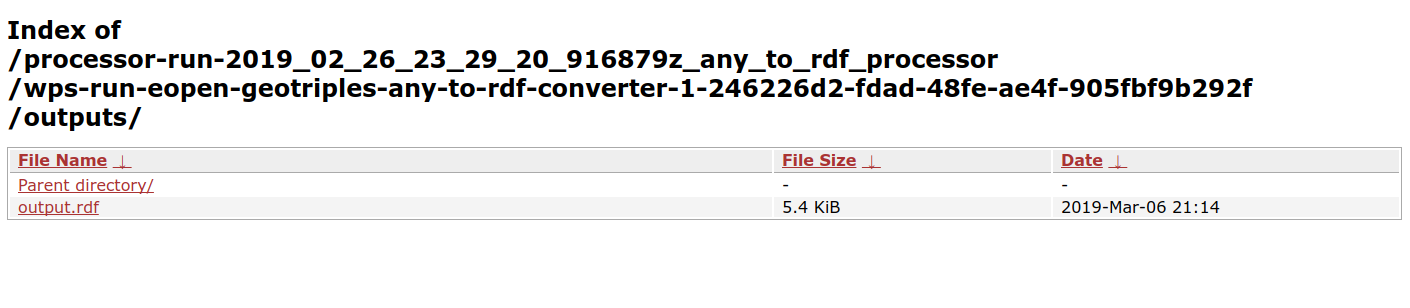

Files stored in out_dir are accessible by the users after the completion

of the related workflow through the Execution Report page, as explained

in Workflow Execution Report.

Importing a Folder Hierarchy¶

As explained in Using the Local Folder all the files

imported using the Web interface of the Process Import Tool are located

in the same folder in the generated Docker image. Archive files (tar and tar.gz files) may

also be uploaded for importing a big amount of files or a folder

hierarchy as these are automatically unpacked in the generated Docker

images.

For example, importing a tar file with the following content:

appfiles/__init__.py

appfiles/utils.py

allows importing the appfiles/utils.py module in a process wrapper as follows:

# Importing the whole utils module

from appfiles import utils

# Importing a particular function

From appfiles.utils import utility_function

This mechanism is not limited to Python packages. Any files required by the process may be included in the Docker image using tar file.

Installing additional Python Packages¶

Python packages that are not available in the proposed software dependencies may be installed on‑demand in the process Docker images by using the standard “pip” tool (https://pip.pypa.io/) and a “requirements.txt” file. This mechanism is automatic. If a file named requirements.txt is uploaded with the process version implementation files, the pip tool is executed and the file is provided as input.

The requirements.txt file is a text file that specifies the name of a Python package per line. Constraints may be expressed to force a given version of a package to be installed.

Note: As explained in Testing Processes Off‑line,

it is good practice to test a process off‑line at first.

This allows to save a lot of time,

especially when the process build and import fails for simple mistakes.

It is thus also advised to test your requirements.txt

file off-line by executing pip locally as follows:

pip install –r requirements.txt

Example requirements.txt file:

# For development

#ipython==3.1.0

# Django

djangorestframework==3.3.3

django-autocomplete-light>=2.0,<3.0

django-rest-swagger==0.3.7

The syntax of the requirements.txt file is fully described in the pip user guide: https://pip.pypa.io/en/stable/user_guide/#requirements-files.

Using the Python Logger¶

The Python logging module is configured to store in a file the log traces generated by the process modules. Each executed process generates a log file that is made available in the corresponding logs folder accessible through the Execution Report pages.

By default, the logging level is set to DEBUG. This may be changed programmatically as shown in the example, below.

Use the Python logger in the usual manner, as follows:

import logging

logger = logging.getLogger(__name__)

# Change the loggging level, if necessary

logger.setLevel(logging.DEBUG).

# Log info, warning, error, debug messages

logger.info('Execution started')

logger.warning('Something may be wrong')

logger.error('Something went wrong')

logger.debug('Received the following data from the service: %s', data)

Should it be necessary, the advanced functions of the logging module may be used as described in the Python documentation: https://docs.python.org/3/library/logging.html.

Main versus Software Dependencies¶

The Process Wrapper Template Generator page of the Process Import Tool allows selecting a Main Dependency as well as a number of Software Dependencies. Because the software dependencies are installed in the Docker images at the process import time, the complete operation may last several minutes. And the more individual software dependencies are selected, the longer is the Docker image building step.

To mitigate this, a number of “main dependencies” are proposed. Each of these correspond to a pre‑built Docker image that already contains a number of software packages and that may be used as parent for the process image.

For example, selecting the “SNAP Sentinel‑1/2/3 Toolbox v7.0 + Snappy” image allows saving the time to download, install and update the SNAP software in the process image. The same applies to the “R interpreter and libraries” image which already contains all the tools necessary for executing R scripts.

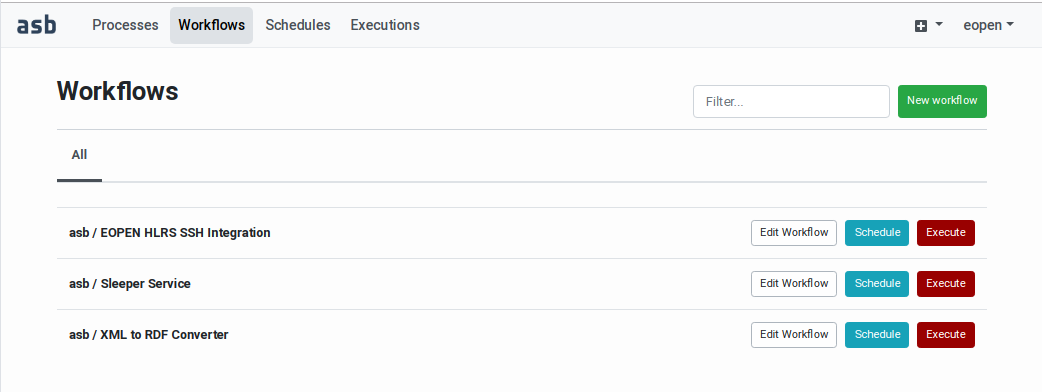

Workflows Management¶

Selecting the Workflows tab in the header leads you to the Workflows

Management page. As shown on Figure 58, the page shows

in a table your own workflows as well as the workflows you are allowed

to access, depending on the workspaces configuration (as explained in

Workflow Sharing).

An input field located above the table allows filtering the workflows. Next to it, a New workflow button allows creating a new workflow (as described in the next section).

Each row in the table includes the following elements:

The owner (creator) of the workflow

The workflow name

An Edit Workflow button leading to the Workflow Editor (see

Workflow Editing)A Schedule button for configuring unattended execution(s) in the future (see

Workflow Executions Scheduling)A Execute button for initiating an on‑demand (immediate) execution (see

Workflow On‑Demand Execution)

Figure 58 Workflows Management Page¶

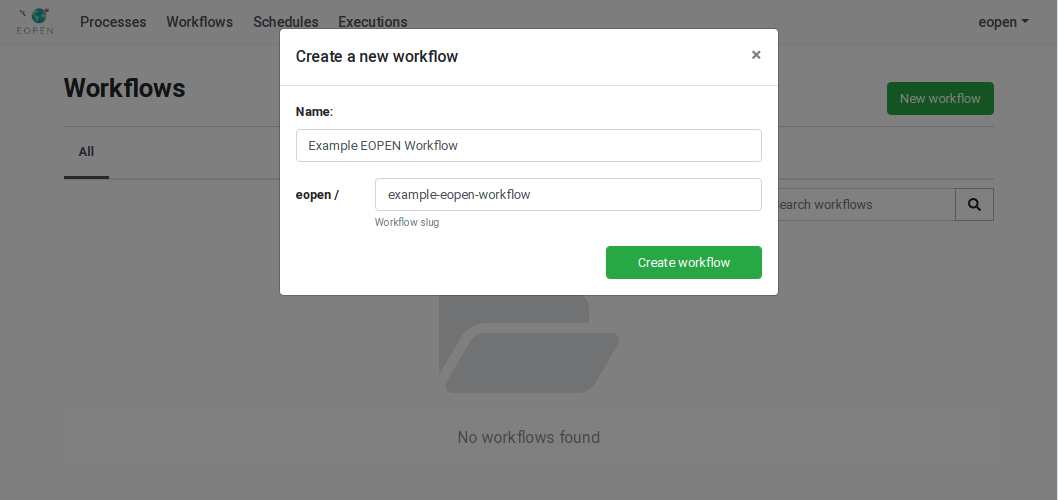

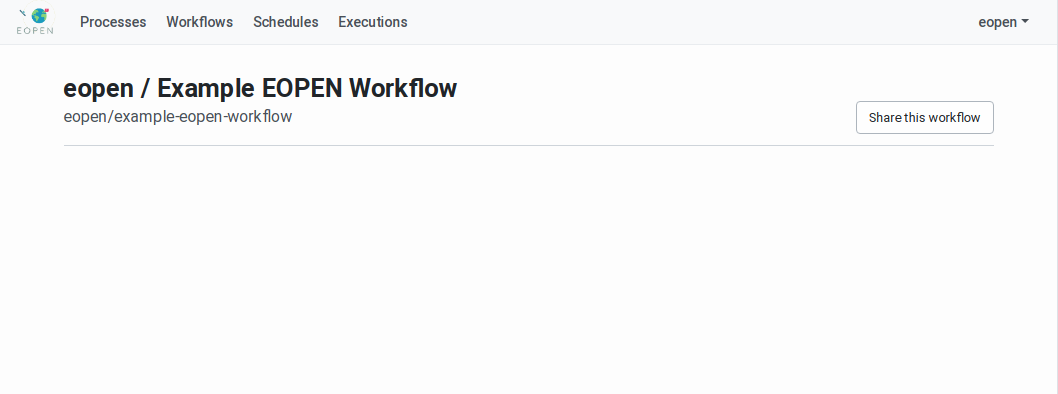

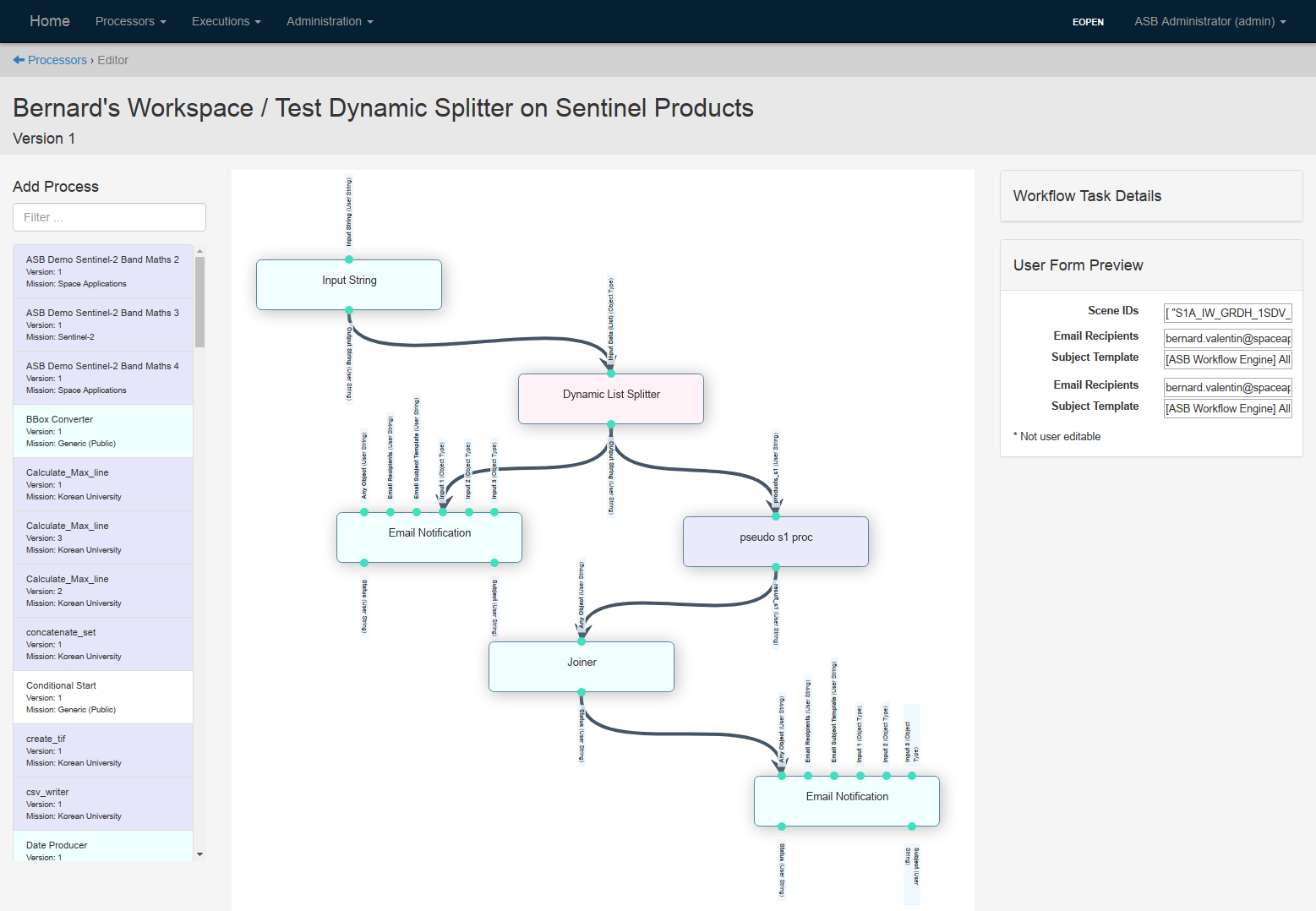

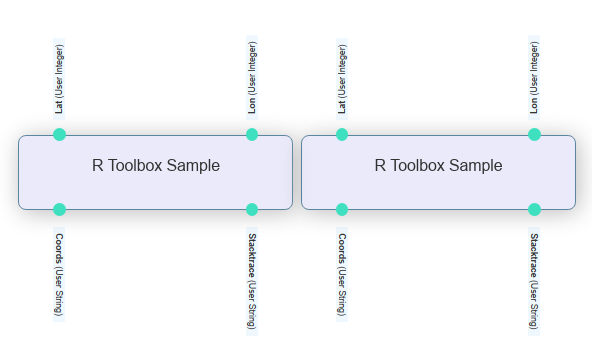

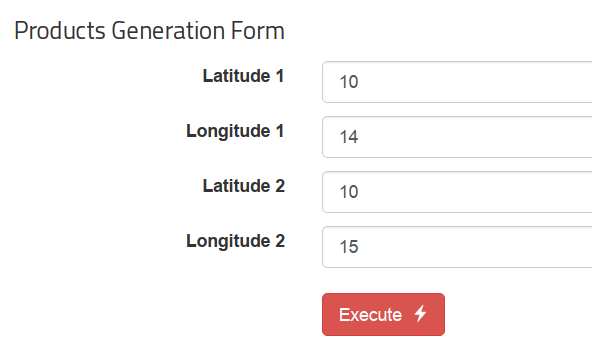

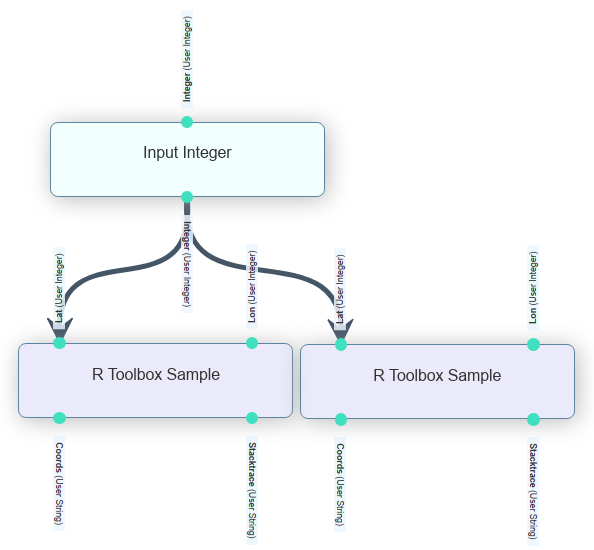

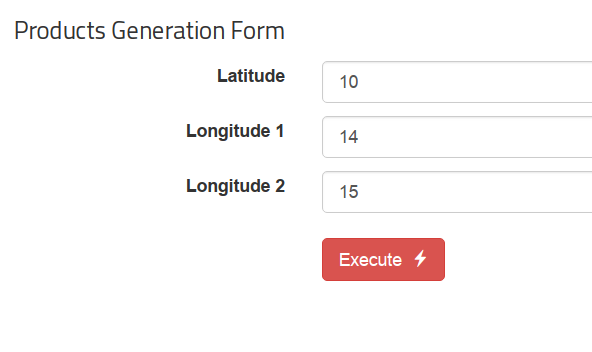

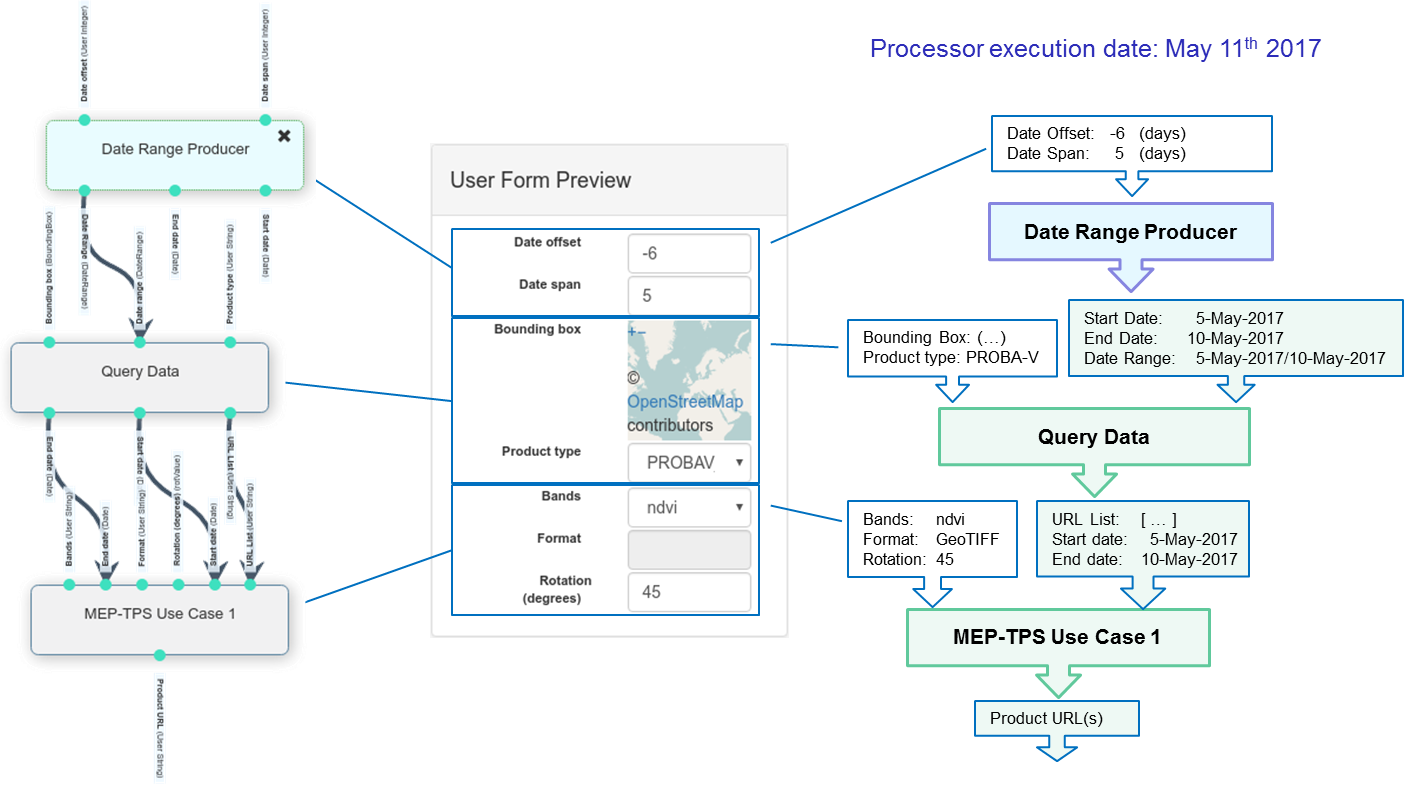

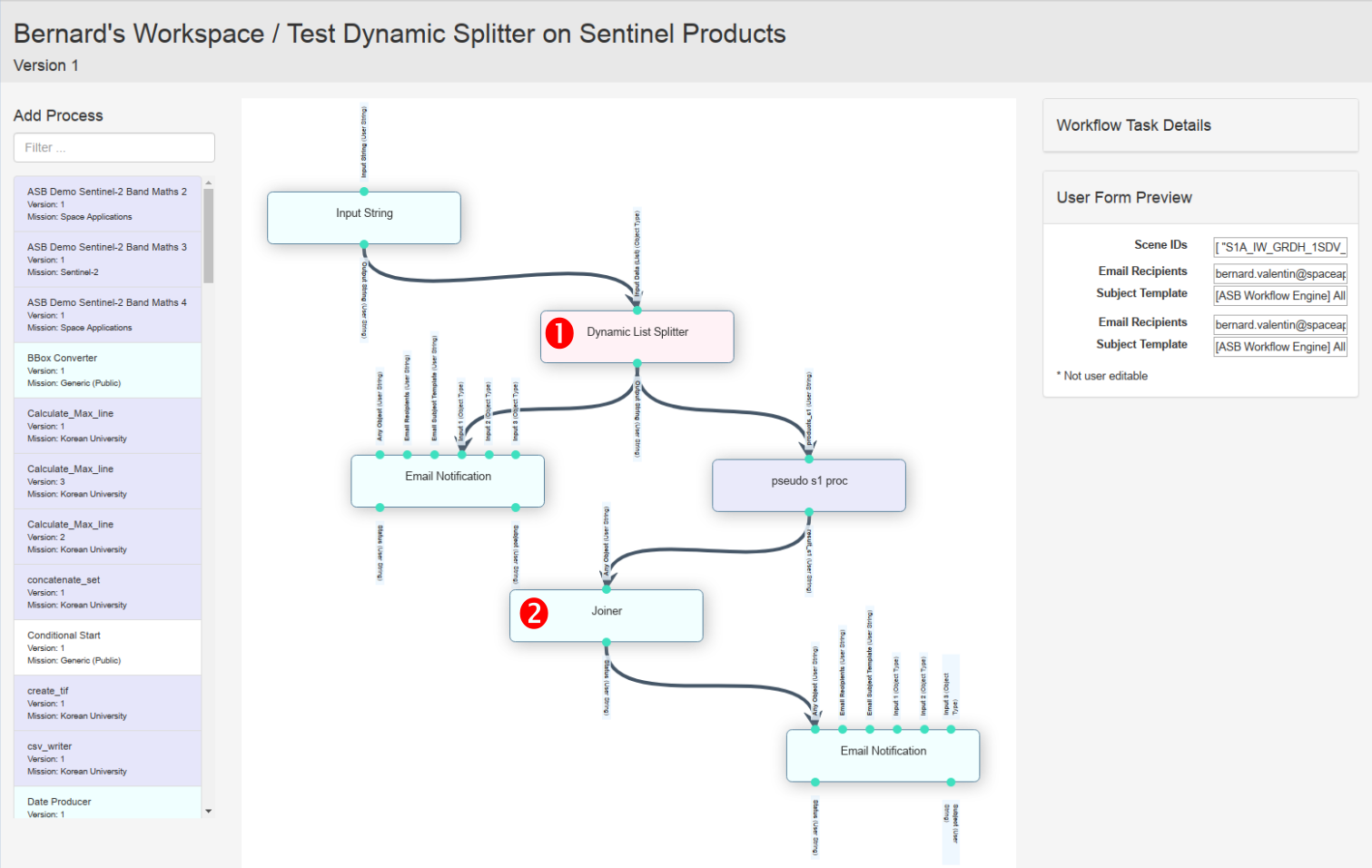

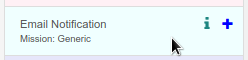

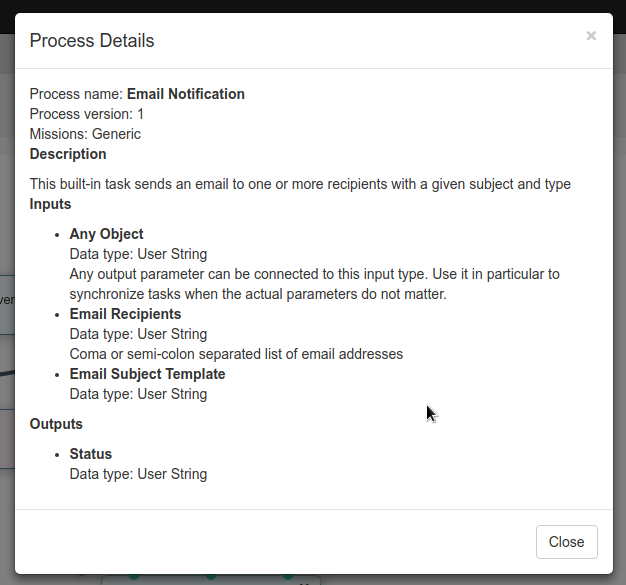

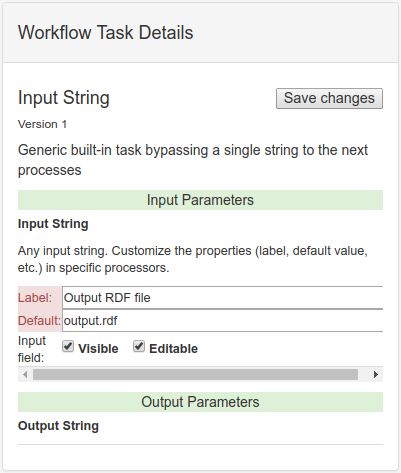

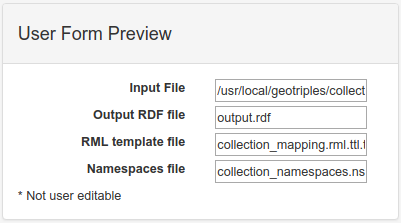

Creation of a New Workflow¶